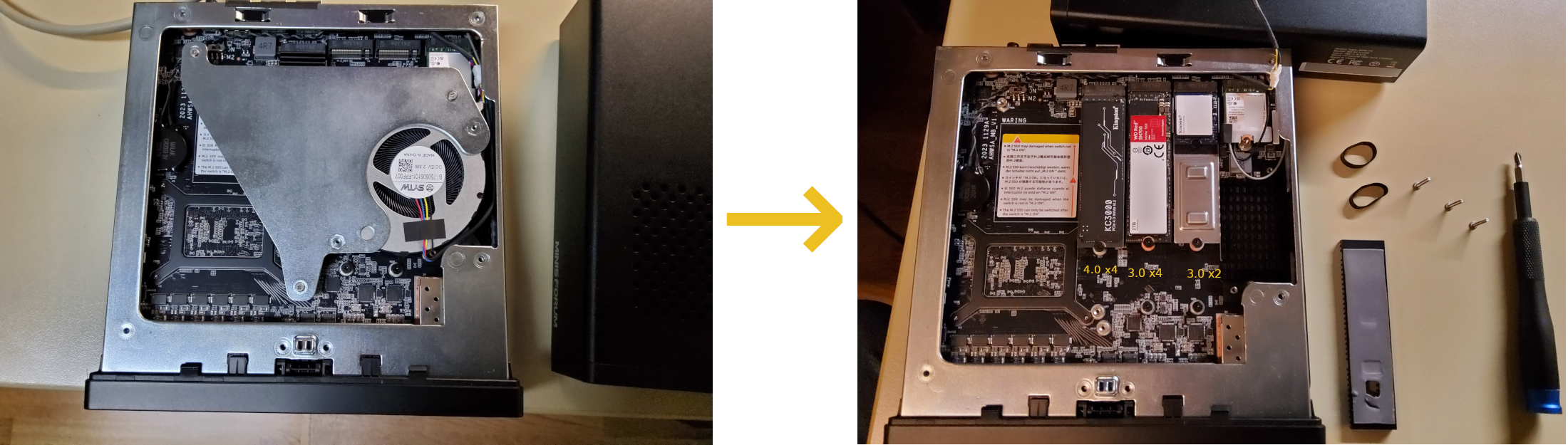

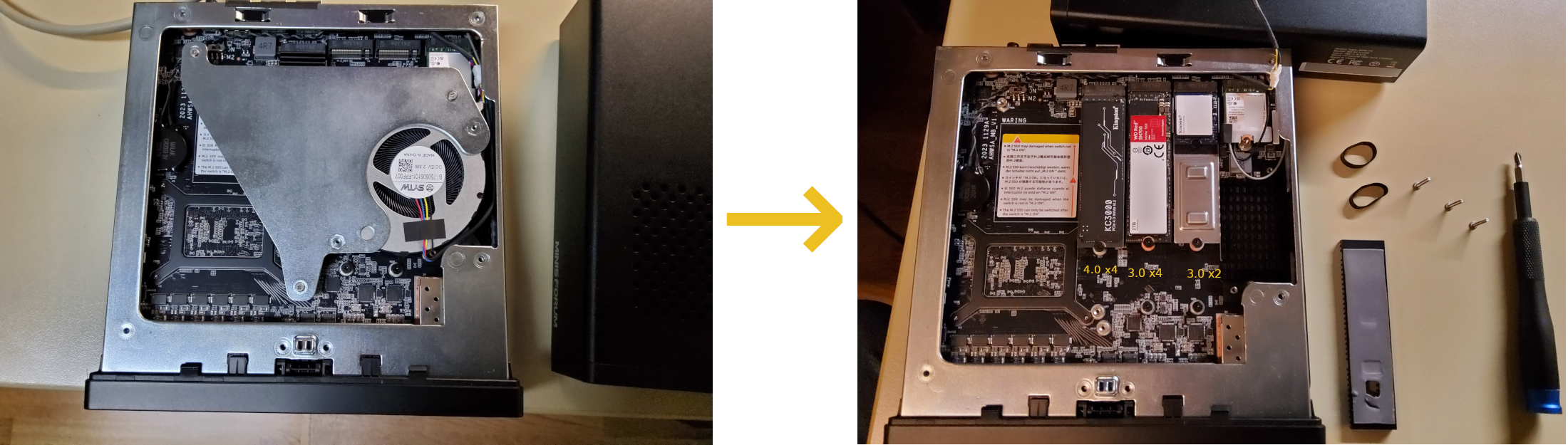

Minisforum MS-01 Work Station, 3 NVMe per host

These are my notes on adding 2 NVMe drives to my Intel Core i9-13900H / 32GB RAM + 1TB SSD bundle nodes. In the process, the bundled 1TB NVMe has its cooler removed and is moved to the PCIe 3.0 x2 slot.


When I built my Ceph cluster in March 2020, I used old SATA HDDs I still had on the shelf. It was always clear that at some point these old consumables would die and need replacing. That time has come, and I went with three 2TB SATA SSDs as OSDs per node.
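Here is a minimal sketch that provisions the three SSDs on one node as BlueStore OSDs in a single `ceph-volume lvm batch` call. It assumes a package-based (non-cephadm) deployment. The device names `/dev/sdb`, `/dev/sdc` and `/dev/sdd` are hypothetical, so check `lsblk` first. Removing the old HDD OSDs is not shown. The `run` helper only prints each command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
set -eu

# Print each command; execute it only when DRY_RUN=0 is set.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

# Create one OSD per given device without an interactive confirmation.
create_ssd_osds() {
  run ceph-volume lvm batch --yes "$@"
}

# Hypothetical device names for the three SATA SSDs.
create_ssd_osds /dev/sdb /dev/sdc /dev/sdd
```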

Just a short write-up on the state of my TerraMaster F5-433 Ceph cluster now that it has been in use for about 3 years.

This is my braindump on installing cephadm on CentOS Stream 9.

I use a QNAP TS-473A plus 2 VMs for my tests, but the steps below apply to any machine or VM running CentOS Stream 9. FWIW, my installation and initial configuration of the TS-473A is described in the post QNAP TS-473A with CentOS Stream 9.
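Here is a minimal sketch of the install and bootstrap steps on the first host. It assumes the CentOS Storage SIG release package for Quincy; the release is an assumption, and you can override it by setting `CEPH_RELEASE`. The monitor IP 192.0.2.10 is hypothetical. The `run` helper only prints each command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
set -eu

# Print each command; execute it only when DRY_RUN=0 is set.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

# Enable the Storage SIG repo, install cephadm plus a container runtime,
# then bootstrap a new cluster with its first monitor on the given IP.
install_and_bootstrap() {
  run dnf install -y "centos-release-ceph-${CEPH_RELEASE:-quincy}"
  run dnf install -y cephadm podman
  run cephadm bootstrap --mon-ip "$1"
}

# Hypothetical monitor IP of the first host.
install_and_bootstrap 192.0.2.10
```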

I want a playground for Ceph’s cephadm that was introduced with Octopus and is also present in Pacific.

So I cleanly took my QNAP TS-473A out of my existing Ceph Nautilus cluster again (because I have enough combined capacity on my F5-422 nodes to be able to remove the OSDs in the TS-473A) and installed Fedora Server 35 plus cephadm from upstream.
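Taking a host out cleanly comes down to draining each of its OSDs before removing it. Here is a minimal sketch for a package-based Nautilus cluster. The OSD IDs 12 to 14 for the TS-473A are hypothetical. Beforehand, `ceph osd df` should show that the remaining OSDs can take the data. The `systemctl` step has to run on the host that carries the OSD. The `run` helper only prints each command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
set -eu

# Print each command; execute it only when DRY_RUN=0 is set.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

# Mark the OSD out, wait until Ceph reports it safe to destroy,
# then stop its daemon and purge it from the cluster maps.
remove_osd() {
  osd_id="$1"
  run ceph osd out "$osd_id"
  # Data migrates away; poll until no PGs depend on this OSD any more.
  while ! run ceph osd safe-to-destroy "osd.$osd_id"; do
    sleep 60
  done
  run systemctl stop "ceph-osd@$osd_id"
  run ceph osd purge "$osd_id" --yes-i-really-mean-it
}

# Hypothetical OSD IDs living on the TS-473A.
for id in 12 13 14; do
  remove_osd "$id"
done
```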

I purchased a QNAP TS-473A. It comes with an AMD Ryzen Embedded V1500B 4-core/8-thread @ 2.2 GHz CPU.

These are my notes from initial bringup.

This is my braindump of using virt-resize to migrate the qcow2 disk files of 3 OpenShift 4 master VMs on a CentOS 7 hypervisor from 70G each in one libvirt storage pool to 150G each in another pool.
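Here is a minimal sketch of the per-VM steps. It assumes the VMs are already shut down and uses hypothetical pool directories and VM names (`master-0` to `master-2`). It also assumes the root filesystem is partition 4 (`/dev/sda4` inside virt-resize). That is a guess about the RHCOS layout, worth checking with `virt-filesystems --long --partitions -a <disk>`. virt-resize copies into a target that must already exist, hence the `qemu-img create`. Afterwards, each domain's disk path still has to point at the new file, e.g. via `virsh edit`. The `run` helper only prints each command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
set -eu

# Print each command; execute it only when DRY_RUN=0 is set.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

# Hypothetical pool directories and target size.
OLD_POOL=/var/lib/libvirt/images
NEW_POOL=/srv/vmpool2
NEW_SIZE=150G

# Create the larger empty image in the new pool, then copy the old disk
# into it while expanding the given partition to fill the extra space.
grow_disk() {
  vm="$1"
  part="$2"
  run qemu-img create -f qcow2 "$NEW_POOL/$vm.qcow2" "$NEW_SIZE"
  run virt-resize --expand "$part" "$OLD_POOL/$vm.qcow2" "$NEW_POOL/$vm.qcow2"
}

for vm in master-0 master-1 master-2; do
  grow_disk "$vm" /dev/sda4
done
```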

This is my braindump of shrinking the existing 1TB LVM cache (on a Samsung 960evo) to half its size and using the freed-up space to host the qcow2 files used by our OpenShift 4 VMs.
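Here is a minimal sketch of the LVM side. All names are hypothetical: `vg_data` for the volume group, `lv_data` for the cached LV and `/dev/nvme0n1` for the 960evo. The `CACHE_SIZE` of 450G is a stand-in for "half size". `lvconvert --uncache` flushes dirty blocks and removes the old cache pool. A smaller pool is then created and attached, and the remaining free space on the SSD becomes a new LV. Using XFS for the qcow2 files is an assumption. The `run` helper only prints each command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
set -eu

# Print each command; execute it only when DRY_RUN=0 is set.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

# Hypothetical names and sizes.
VG=vg_data
CACHED_LV=lv_data
CACHE_PV=/dev/nvme0n1
CACHE_SIZE=450G

shrink_cache() {
  # Detach the cache: dirty blocks are written back, the cache pool is removed.
  run lvconvert --uncache "$VG/$CACHED_LV"
  # Re-create a smaller cache pool on the SSD and attach it again.
  run lvcreate --type cache-pool -L "$CACHE_SIZE" -n cpool "$VG" "$CACHE_PV"
  run lvconvert --yes --type cache --cachepool "$VG/cpool" "$VG/$CACHED_LV"
  # Give whatever is left on the SSD to a new LV for the qcow2 files.
  run lvcreate -l 100%PVS -n lv_qcow2 "$VG" "$CACHE_PV"
  run mkfs.xfs "/dev/$VG/lv_qcow2"
}

shrink_cache
```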

This is a quick braindump of setting up our home GitLab instance (on CentOS 8) to back up to an existing Ceph Object Gateway using the S3 API.

```
user@workstation tmp $ s3cmd --access_key=FOO --secret_key=BAR ls s3://gitlab-backup/
2020-09-09 19:28 686305280 s3://gitlab-backup/1599679709_2020_09_09_13.3.5-ee_gitlab_backup.tar
```

This is my braindump on setting up NFS-Ganesha to serve 3 separate directories on my CephFS using 3 separate cephx users.
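Here is a minimal sketch that creates the three path-restricted cephx users with `ceph fs authorize`. It assumes a file system named `cephfs`; the user and directory names are hypothetical. Each command prints the new user's keyring. On the Ganesha side, each `EXPORT` block's `FSAL { Name = CEPH; ... }` section would then reference its own user via `User_Id`. The `run` helper only prints each command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
set -eu

# Print each command; execute it only when DRY_RUN=0 is set.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

FS_NAME=cephfs

# Create (or reuse) a cephx user with rw caps limited to one directory.
authorize_export() {
  user="$1"
  dir="$2"
  run ceph fs authorize "$FS_NAME" "client.$user" "$dir" rw
}

# Hypothetical users and directories, one per NFS export.
authorize_export nfs-media /media
authorize_export nfs-photos /photos
authorize_export nfs-scratch /scratch
```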

This is a quick braindump of setting up our home GitLab instance (on CentOS 8) to back up to an existing CephFS, but with access limited to the directory /GitLab_backup.

```
[root@gitlab ~]# df -h /mnt/cephfs-GitLab_backup/
Filesystem      Size  Used Avail Use% Mounted on
ceph-fuse       5.3T  175G  5.1T   4% /mnt/cephfs-GitLab_backup
```
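Here is a minimal sketch of both sides. It assumes the file system is named `cephfs`, that `/GitLab_backup` already exists in it, and that `ceph.conf` plus the keyring of the hypothetical user `client.gitlab` have been copied to `/etc/ceph/` on the GitLab host. `ceph-fuse -r` mounts only that subtree as the root of the mount point, which matches the `df` output for `/mnt/cephfs-GitLab_backup`. The `run` helper only prints each command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
set -eu

# Print each command; execute it only when DRY_RUN=0 is set.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

MNT=/mnt/cephfs-GitLab_backup

# On a Ceph admin node: a cephx user that may only use /GitLab_backup.
authorize_gitlab() {
  run ceph fs authorize cephfs client.gitlab /GitLab_backup rw
}

# On the GitLab host: mount just that subtree via ceph-fuse.
mount_backup_dir() {
  run mkdir -p "$MNT"
  run ceph-fuse -n client.gitlab -r /GitLab_backup "$MNT"
}
```

Call `authorize_gitlab` once on an admin node and `mount_backup_dir` on the GitLab host.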