Minisforum MS-01 Work Station, 3 NVMe per host

Table of Contents

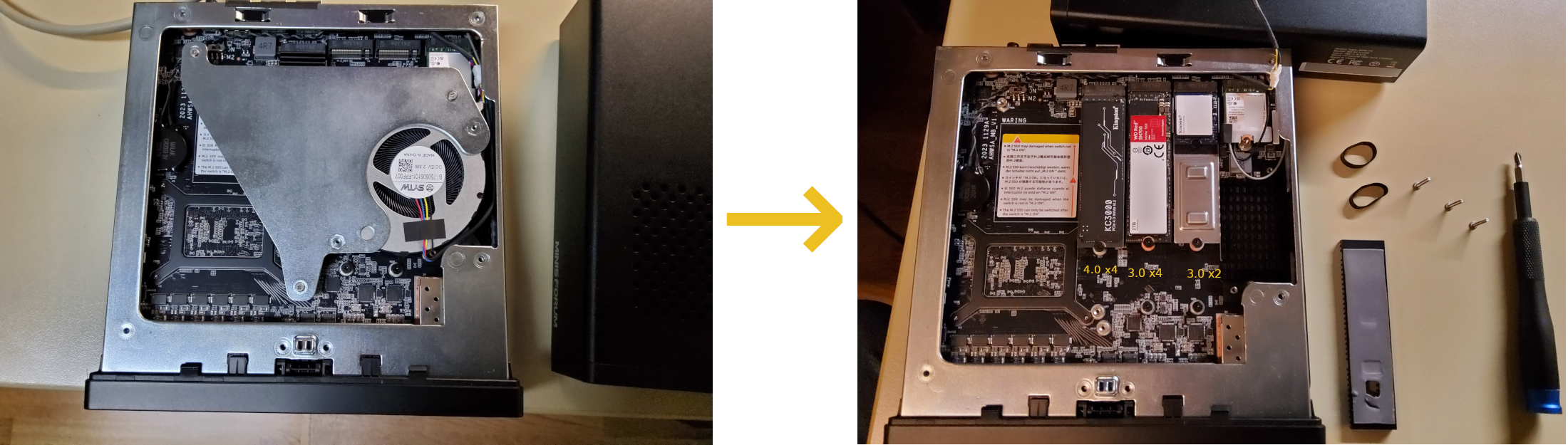

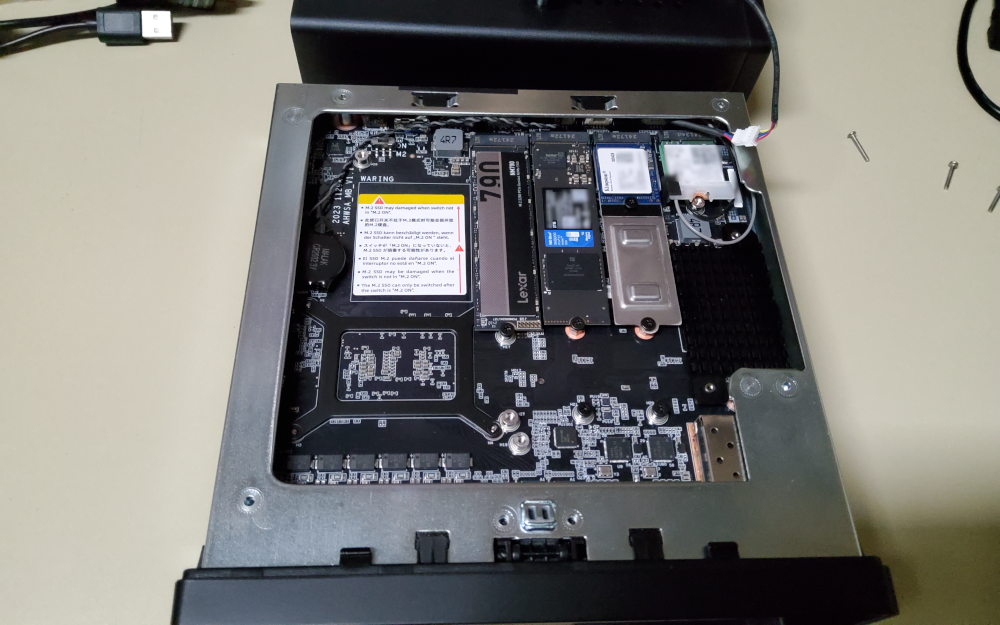

These are my notes on adding 2 NVMe to my Intel Core i9-13900H / 32GB RAM + 1TB SSD bundle nodes. In the process, the bundled 1TB NVMe has the cooler removed and is moved to the 3.0 x2 slot.

The two faster M.2 slots (one 4.0 x4 and one 3.0 x4) will be used for Ceph OSDs.

M.2 slots to /dev/disk/by-path mappings

From left to right, where left is the slot closest to the U.2 | M.2 power toggle switch and right is closest to the WiFi/BT M.2 card

| location | PCIe | length | disk by path |

|---|---|---|---|

| left | 4.0 x4 | 2280 or U.2 | /dev/disk/by-path/pci-0000:01:00.0-nvme-1 |

| middle | 3.0 x4 | 2280 / 22110 | /dev/disk/by-path/pci-0000:58:00.0-nvme-1 |

| right | 3.0 x2 | 2280 / 22110 | /dev/disk/by-path/pci-0000:59:00.0-nvme-1 |

https://store.minisforum.de/en/blogs/blogartikels/ms-01-work-station has a picture (that information would have been great in the User Manual or printed somewhere visible after removing the motherboard case).

The leftmost slot is the one that can function as U.2 or M.2

click to see relevant parts of /dev/disk/by-id/… and /dev/disk/by-path/…

[root@ms-01-01 ~]# ls -l /dev/disk/by-id/nvme-*

[…]

[…] /dev/disk/by-id/nvme-KINGSTON_OM3PGP41024P-A0_[…] -> ../../nvme1n1

[…] /dev/disk/by-id/nvme-KINGSTON_SKC3000D2048G_[…] -> ../../nvme2n1

[…] /dev/disk/by-id/nvme-WD_Red_SN700_2000GB_[…] -> ../../nvme0n1

[root@ms-01-01 ~]# ls -l /dev/disk/by-path/

[…]

[…] pci-0000:01:00.0-nvme-1 -> ../../nvme2n1

[…] pci-0000:58:00.0-nvme-1 -> ../../nvme0n1

[…] pci-0000:59:00.0-nvme-1 -> ../../nvme1n1

[…]

[root@ms-01-01 ~]#

The important bit here for me is to not feed the kickstart file /dev/nvme…n1,

but rather use /dev/disk/by-path/pci-0000:59:00.0-nvme-1

because I want to ensure I write the OS to the (1TB) NVMe that I moved to the 3.0 x2 slot.

Do not

The the other two, faster, slots have been given each a 2TB NVMe for Ceph OSD use.

Size of included mounted NVMe cooler

The cooler fitted to the bundled 1 TB NVMe gives it a massive look, adding about 6mm to the nvme height and about 1mm at the bottom and is longer than a 2280 NVMe alas in the 3.0 x2 slot, the fan assembly is in the way, so I removed it.

The shipped 1TB NVMe itself is a short one (2230), so I kept it in the tray to be able to mount it in the 3.0 x2 2280 / 22110 slot.

The cooler only fits if mounted to the NVMe in the 4.0 x4 slot (the left hand one, close to the U.2 | M.2 power toggle switch). In the other two the fan assembly is in the way. And with the dents that the 2230 to 2280 tray left I do not plan to re-use them.

My monitoring will tell me if the NVMe in the 4.0 x4 slot gets too hot, should heat become an issue then I will look at coolers again.

One NIC changed name, enp88s0 → enp90s0

After filling all M.2 slots, the NIC closest to the SFP+ changed name.

enp88s0 → enp90s0 but that is not unexpected and was dealt with by

adjusting the kickstart file and the network boot target followed by

kickstarting the nodes again.

Wanted to retest the kickstart files after the NVMe addition anyway.

Click for more details.

ToDo: maybe do some more fancy ks send mac of booting interface faff? Undecided for now. Bringing up Ceph Squid on the five nodes is the current priority. Plus if I ever do re-kickstart in the future (e.g. after replacing the NVMe containing the OS after it inevitably died of old age), then I will have 3 NVMe present in the node.

node 02 after firmware upgrade but before NVMe addition

interface names still as during install, even after applying all updates, which includes a new kernel.

[root@ms-01-02 ~]# dmidecode -t bios|grep Version

Version: 1.26

[root@ms-01-02 ~]# ip -c a s

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

[…]

2: enp87s0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000

link/ether [REDACTED] brd ff:ff:ff:ff:ff:ff

3: enp88s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether [REDACTED] brd ff:ff:ff:ff:ff:ff

[…]

4: enp2s0f0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000

link/ether [REDACTED] brd ff:ff:ff:ff:ff:ff

5: enp2s0f1: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000

link/ether [REDACTED] brd ff:ff:ff:ff:ff:ff

6: wlp89s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether [REDACTED] brd ff:ff:ff:ff:ff:ff

[root@ms-01-02 ~]# uname -r

5.14.0-542.el9.x86_64

node 02 after NVMe addition

After filling all NVMe slots, I have again enp88s0 → enp90s0, like I had on node 01.

[root@ms-01-02 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

sr0 11:0 1 1024M 0 rom

nvme0n1 259:0 0 1.8T 0 disk

nvme1n1 259:1 0 1.8T 0 disk

nvme2n1 259:2 0 953.9G 0 disk

├─nvme2n1p1 259:3 0 600M 0 part /boot/efi

├─nvme2n1p2 259:4 0 1G 0 part /boot

└─nvme2n1p3 259:5 0 200G 0 part

├─VG_OS-LV_root 253:0 0 4G 0 lvm /

├─VG_OS-LV_swap 253:1 0 1G 0 lvm [SWAP]

├─VG_OS-LV_var_crash 253:2 0 40G 0 lvm /var/crash

├─VG_OS-LV_var 253:3 0 12G 0 lvm /var

├─VG_OS-LV_home 253:4 0 1G 0 lvm /home

├─VG_OS-LV_var_log 253:5 0 10G 0 lvm /var/log

└─VG_OS-LV_containers 253:6 0 10G 0 lvm /var/lib/containers

[root@ms-01-02 ~]# ip -c a s

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

[…]

2: enp87s0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000

link/ether [REDACTED] brd ff:ff:ff:ff:ff:ff

3: enp90s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether [REDACTED] brd ff:ff:ff:ff:ff:ff

[…]

4: enp2s0f0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000

link/ether [REDACTED] brd ff:ff:ff:ff:ff:ff

5: enp2s0f1: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000

link/ether [REDACTED] brd ff:ff:ff:ff:ff:ff

6: wlp91s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether [REDACTED] brd ff:ff:ff:ff:ff:ff

Action, re-kickstart

This name change is not utterly surprising and while I could deal with this, I need to know anyway if the nodes in the Ceph built can be kickstarted for the day the 1TB OS holding SSD dies. cattle, not pets and all that.

I adjusted the one kickstart file I use for all 5 nodes as follows

[…]

# Network information, all switch ports have the respective VLAN as native

# WiFi

network --bootproto=dhcp --device=wlp91s0 --onboot=off --ipv6=auto --no-activate

# 2.5 GbE close to Thunderbolt ports (will go on 'storage' via ansible)

network --bootproto=dhcp --device=enp87s0 --onboot=off --ipv6=auto --no-activate

# 2.5 GbE close to SFP+ ('access' network)

network --bootproto=dhcp --device=enp90s0 --ipv6=auto --activate

# SFP+ port left, near case edge (will go on 'ceph' via ansible)

network --bootproto=dhcp --device=enp2s0f0 --onboot=off --ipv6=auto --no-activate

# SFP+ port right, near 2.5 GbE ports

network --bootproto=dhcp --device=enp2s0f1 --onboot=off --ipv6=auto --no-activate

[…]

On my netboot server in /var/lib/tftpboot/uefi/grub.cfg, I now have

[…]

menuentry 'Kickstart CentOS Stream 9 on minisforum MS-01 for Ceph use' --class fedora --class gnu-linux --class gnu --class os {

linuxefi images/CentOS-Stream-9-20241209.0-x86_64/vmlinuz inst.kdump_addon=on ip=enp90s0:dhcp inst.repo=http://fileserver.internal.pcfe.net/ftp/distributions/CentOS/9-stream/DVD/x86_64 inst.ks=ftp://fileserver.internal.pcfe.net/pub/kickstart/CentOSstream9-x86_64-ceph-on-ms-01-ks.cfg

initrdefi images/CentOS-Stream-9-20241209.0-x86_64/initrd.img

}

[…]

All five nodes done

node 01

node 02

node 03

node 04

node 05