WIP: SoftIron OverDrive 1000


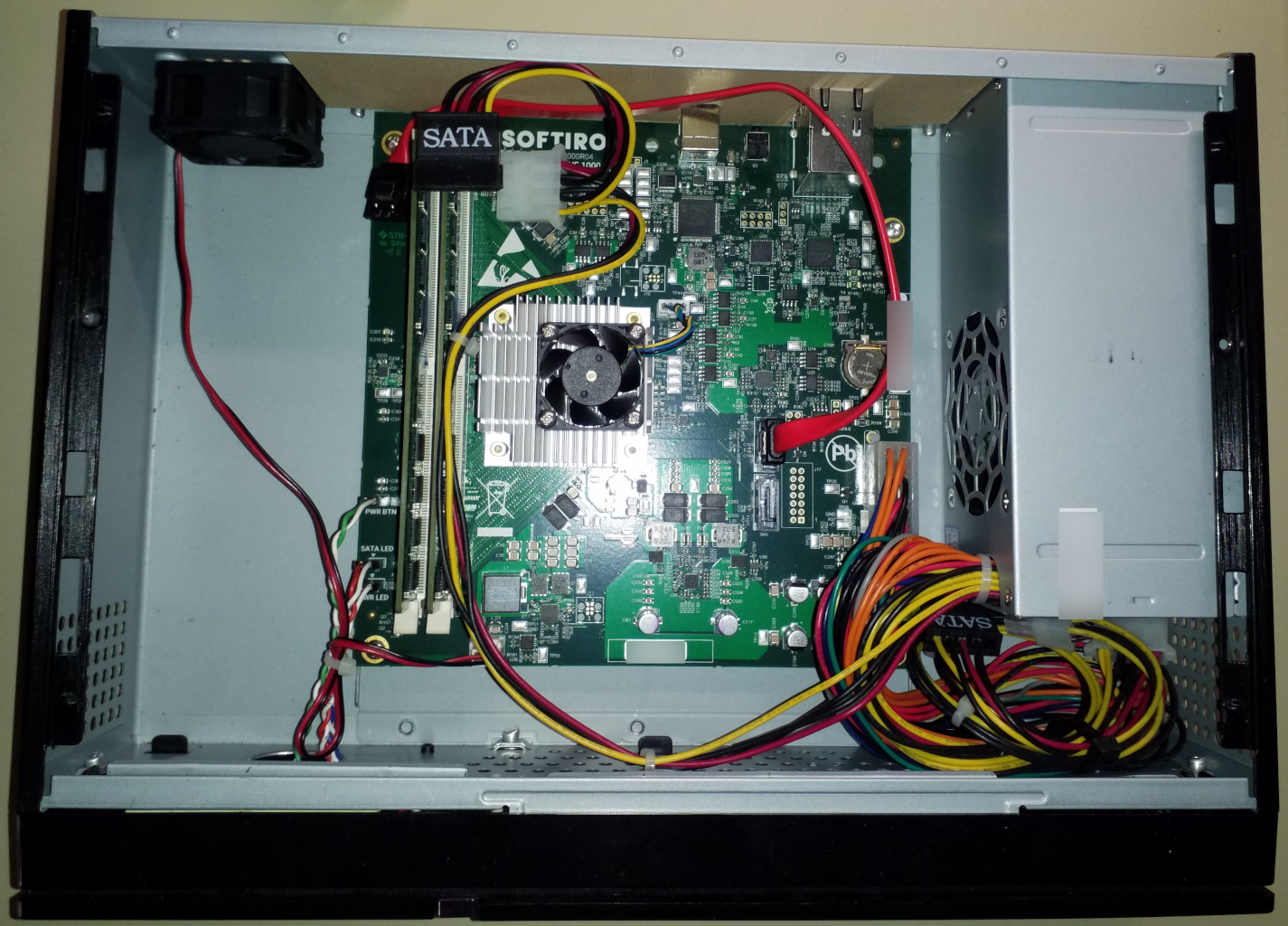

I was gifted a SoftIron OverDrive 1000, a machine based on the ARMv8 AMD Opteron A1100 (“Seattle”).

This post is my braindump. Expect it to change until I remove ‘WIP: ’ from the title.

Overview

A colleague recently gave me this aarch64 box that he no longer had a use for.

It is much nicer than my ODROID-HC2 boards because:

- it’s 64-bit

- it has UEFI

- it has serial console directly on the motherboard

- it has 2 SATA ports

Hardware Specifications

From the Printed Manual

- AMD Opteron-A SoC with 4 ARM Cortex-A57 cores,

- 8 GB DDR4 RAM,

- 1x Gigabit Ethernet,

- 2x USB 3.0 SuperSpeed host ports,

- 2x SATA 3.0 ports,

- 1 TB hard drive,

- USB Console port.

From the Shell

CPU

[root@overdrive-1000 ~]# lscpu

Architecture: aarch64

Byte Order: Little Endian

CPU(s): 4

On-line CPU(s) list: 0-3

Thread(s) per core: 1

Core(s) per socket: 2

Socket(s): 2

NUMA node(s): 1

Vendor ID: ARM

Model: 2

Model name: Cortex-A57

Stepping: r1p2

BogoMIPS: 500.00

NUMA node0 CPU(s): 0-3

Flags: fp asimd evtstrm aes pmull sha1 sha2 crc32 cpuid

Memory

[root@overdrive-1000 ~]# free -h
              total        used        free      shared  buff/cache   available
Mem:          7.8Gi       304Mi       5.6Gi       0.0Ki       1.9Gi       7.4Gi
Swap:         511Mi          0B       511Mi

Hardware Modifications

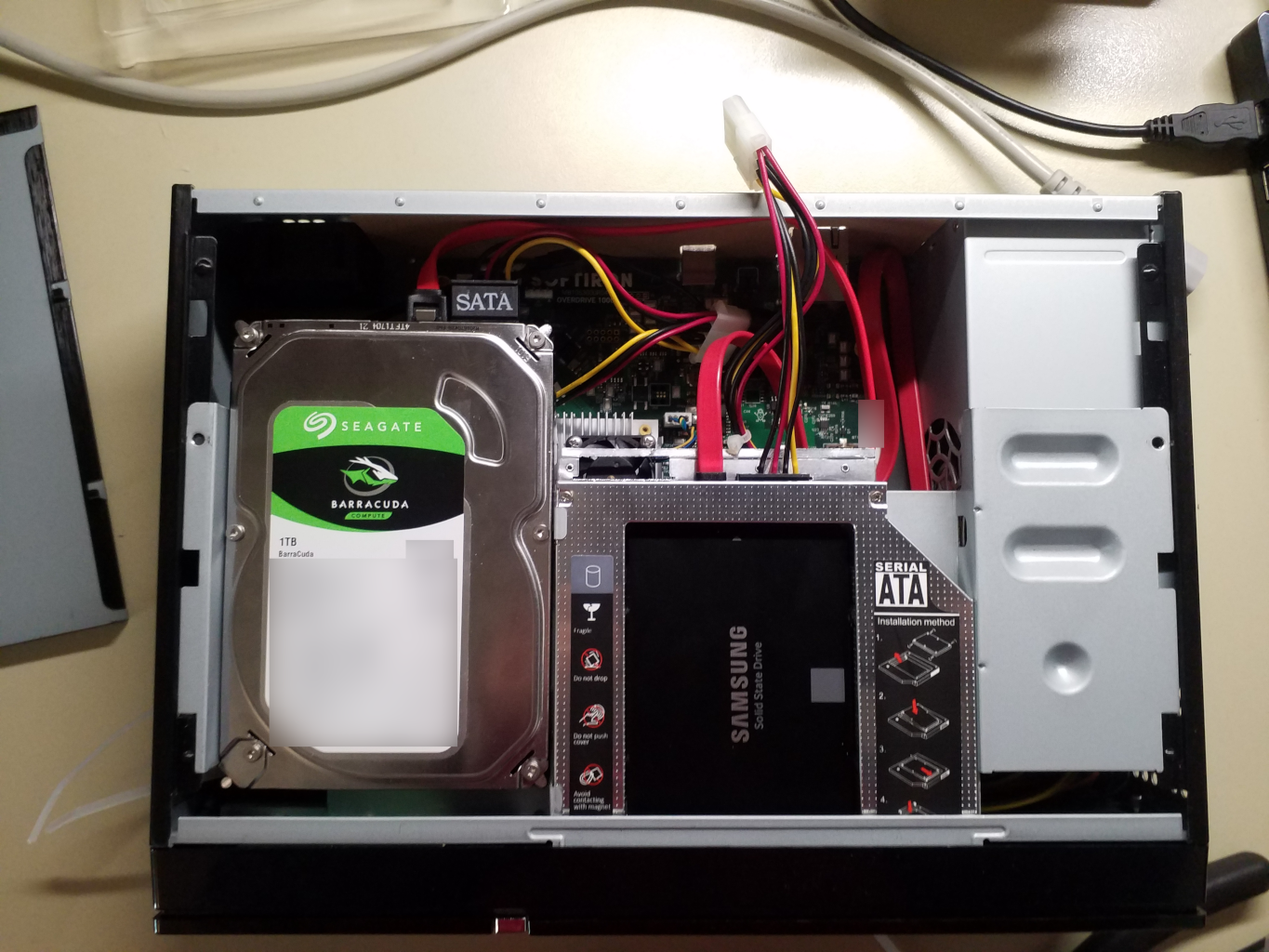

Added an SSD

Since the motherboard has 2 SATA ports and only one HDD was connected, I purchased a 500 GB Samsung 860 EVO 2.5-inch SSD and a second-HDD caddy, the kind sold for fitting a 2.5-inch SATA HDD or SSD into a 12.7 mm universal laptop optical (CD/DVD-ROM) bay.

The latter was relieved of its adapter board and LED. I then modified the caddy case with pliers to allow a direct connection to the SSD.

Replaced the case fan

Since the box had no IO shield when I got it, I added some cardboard to improve airflow. Still, with the case closed, the HDD reported the following temperatures: Max: 46°C, Avg: 42°C.

So I replaced the case fan with a Noctua NF-A4x20 FLX.

Now I get: Max: 40°C, Avg: 37°C.

Fedora 29 on the OverDrive 1000

My first install was Fedora 29, which installed without any issues.

CentOS 7 on the OverDrive 1000

After Fedora 29, I gave CentOS 7 a spin.

CentOS 7.5

CentOS 7.5 installed fine. But when I applied the following 2 updates:

mokutil.aarch64 15-1.el7.centos base

shim-aa64.aarch64 15-1.el7.centos base

the machine would no longer boot. I filed a bug and will continue following up on that.

CentOS 7.6

The CentOS 7.6 install media do not work because of this bug. As a workaround for the time being, I started with a CentOS 7.5 install and, when upgrading, I exclude shim-aa64 with

yum upgrade --exclude=shim-aa64 --skip-broken

until the bug is fixed.

Initial Setup with Ansible

pcfe@karhu pcfe.net (master) $ ansible-playbook -i ../inventories/ceph-ODROID-cluster.ini -l overdrive-1000 arm-fedora-initial-setup.yml

The playbook used reads as follows:

# initially sets up my ARM based boxes
# you can run this after completing the steps at
# https://blog.pcfe.net/hugo/posts/2019-01-27-fedora-29-on-odroid-hc2/
#
# this also works for boxes installed with
# Fedora-Server-dvd-aarch64-29-1.2.iso
#
# this initial setup Playbook must connect as user root,
# after it ran we can connect as user ansible.
# since user_owner is set (in vars: below) to 'ansible',
# pcfe.user_owner creates the user 'ansible' and drops in ssh pubkeys
#
# this is for my ODROID-HC2 boxes and my OverDrive 1000
#
- hosts:
    - odroids
    - softiron
    - f5-422-01
  become: no
  roles:
    - pcfe.user_owner
    - pcfe.basic_security_setup
    - pcfe.housenet
  vars:
    ansible_user: root
    user_owner: ansible
  tasks:
    # should set hostname to ansible_fqdn
    # https://docs.ansible.com/ansible/latest/modules/hostname_module.html
    # F31 RC no longer seems to set it...
    # debug first though

    # start by enabling time sync, while my ODROIDs do have the RTC battery add-on, yours might not.
    # Plus it's nice to be able to wake up the boards from poweroff
    # and have the correct time already before chrony-wait runs at boot
    - name: "CHRONYD | ensure chrony-wait is enabled"
      service:
        name: chrony-wait
        enabled: true

    - name: "CHRONYD | ensure chronyd is enabled and running"
      service:
        name: chronyd
        enabled: true
        state: started

    # enable persistent journal
    # DAFUQ? re-ran on all odroids, it reported 'changed' instead of 'ok'?!?
    - name: "JOURNAL | ensure persistent logging for the systemd journal is possible"
      file:
        path: /var/log/journal
        state: directory
        owner: root
        group: systemd-journal
        mode: '0755'

    # enable passwordless sudo for the created ansible user
    - name: "SUDO | enable passwordless sudo for ansible user"
      copy:
        dest: /etc/sudoers.d/ansible
        content: |
          ansible ALL=NOPASSWD: ALL
        owner: root
        group: root
        mode: '0440'

    # I do want all errata applied
    - name: "DNF | ensure all updates are applied"
      dnf:
        update_cache: yes
        name: '*'
        state: latest
      tags: apply_errata

General Setup with Ansible

pcfe@karhu pcfe.net (master) $ ansible-playbook -i ../inventories/ceph-ODROID-cluster.ini softiron-general-setup.yml

The playbook used reads as follows:

# sets up a Fedora 29 ARM minimal install
# or a CentOS 7 ARM install
# with site-specific settings
# to be run AFTER arm-fedora-initial-setup.yml RAN ONCE at least
#
# this is for my SoftIron OverDrive 1000 box
- hosts:
    - softiron
  become: yes
  roles:
    - linux-system-roles.network
    - pcfe.basic_security_setup
    - pcfe.user_owner
    - pcfe.comfort
    - pcfe.checkmk
  tasks:
    # # linux-system-roles.network sets static network config (from host_vars)
    # # but I want the static hostname nailed down too
    # # the below does not work though, try with ansible_fqdn instead
    # - name: "set hostname"
    #   hostname:
    #     name: '{{ ansible_hostname }}.internal.pcfe.net'

    # FIXME, only do the below task on Fedora 29
    # # fix dnf's "Failed to set locale, defaulting to C" annoyance
    # - name: "PACKAGE | ensure my preferred langpacks are installed"
    #   package:
    #     name:
    #       - langpacks-en
    #       - langpacks-en_GB
    #       - langpacks-de
    #       - langpacks-fr
    #     state: present

    - name: "FIREWALLD | ensure check-mk-agent is allowed in zone public"
      firewalld:
        port: 6556/tcp
        permanent: true
        zone: public
        state: enabled
        immediate: true

    # enable watchdog
    # it's a Jun 22 13:12:09 localhost kernel: sbsa-gwdt e0bb0000.gwdt: Initialized with 10s timeout @ 250000000 Hz, action=0.
    - name: "WATCHDOG | ensure kernel module sbsa_gwdt has correct options configured"
      lineinfile:
        path: /etc/modprobe.d/sbsa_gwdt.conf
        create: true
        regexp: '^options '
        insertafter: '^#options'
        line: 'options sbsa_gwdt timeout=30 action=1 nowayout=0'

    # while testing, configure both watchdog.service and systemd watchdog, but only use the latter for now.
    - name: "PACKAGE | ensure watchdog package is installed"
      package:
        name: watchdog
        state: present

    - name: "WATCHDOG | ensure correct watchdog-device is used by watchdog.service"
      lineinfile:
        path: /etc/watchdog.conf
        regexp: '^watchdog-device'
        insertafter: '^#watchdog-device'
        line: 'watchdog-device = /dev/watchdog'

    - name: "WATCHDOG | ensure timeout is set to 30 seconds for watchdog.service"
      lineinfile:
        path: /etc/watchdog.conf
        regexp: '^watchdog-timeout'
        insertafter: '^#watchdog-timeout'
        line: 'watchdog-timeout = 30'

    # install and enable rngd
    - name: "PACKAGE | ensure rng-tools package is installed"
      package:
        name: rng-tools
        state: present

    - name: "RNGD | ensure rngd.service is enabled and started"
      systemd:
        name: rngd.service
        state: started
        enabled: true

    # testing in progress;
    # Using systemd watchdog rather than watchdog.service
    # the box stays up, I see logged
    # Mar 6 11:13:01 localhost kernel: sbsa-gwdt e0bb0000.gwdt: Initialized with 30s timeout @ 250000000 Hz, action=1.
    # but when I forcefully crash the box, it does not reboot.
    # needs investigating
    - name: "WATCHDOG | Ensure watchdog.service is disabled"
      systemd:
        name: watchdog.service
        state: stopped
        enabled: false

    # configure systemd watchdog
    # c.f. http://0pointer.de/blog/projects/watchdog.html
    - name: "SYSTEMD | ensure systemd watchdog is enabled"
      lineinfile:
        path: /etc/systemd/system.conf
        regexp: '^RuntimeWatchdogSec'
        insertafter: 'EOF'
        line: 'RuntimeWatchdogSec=30'

    - name: "SYSTEMD | ensure systemd shutdown watchdog is enabled"
      lineinfile:
        path: /etc/systemd/system.conf
        regexp: '^ShutdownWatchdogSec'
        insertafter: 'EOF'
        line: 'ShutdownWatchdogSec=30'
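Several tasks in this playbook rely on lineinfile with both regexp and insertafter. As a rough mental model only (a simplified sketch, not Ansible's actual implementation), the behaviour for state=present can be pictured as: replace the last line matching regexp; otherwise insert after the last line matching insertafter; otherwise append at the end. The function name `ensure_line` and the sample file contents are made up for illustration.

```python
import re


def ensure_line(lines, regexp, line, insertafter="EOF"):
    """Simplified model of lineinfile (state=present) on a list of lines.

    Assumption: this only mirrors the documented rules used in the playbook
    (last regexp match is replaced, else insertafter, else end of file).
    """
    result = list(lines)
    pattern = re.compile(regexp)
    matches = [i for i, text in enumerate(result) if pattern.search(text)]
    if matches:
        # An existing setting is rewritten in place, so re-runs change nothing.
        result[matches[-1]] = line
        return result
    if insertafter != "EOF":
        anchor = re.compile(insertafter)
        hits = [i for i, text in enumerate(result) if anchor.search(text)]
        if hits:
            # Put the new setting right below the commented-out default.
            result.insert(hits[-1] + 1, line)
            return result
    # No match anywhere: append at the end of the file.
    result.append(line)
    return result


# Hypothetical file contents, for illustration only.
watchdog_conf = ["#watchdog-device = /dev/watchdog", "#watchdog-timeout = 60"]
step1 = ensure_line(watchdog_conf, r"^watchdog-device",
                    "watchdog-device = /dev/watchdog", r"^#watchdog-device")
step2 = ensure_line(step1, r"^watchdog-timeout",
                    "watchdog-timeout = 30", r"^#watchdog-timeout")
print("\n".join(step2))
```

Running the same call a second time leaves the list unchanged, which is the property that lets the playbook be re-run safely.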

Ceph Preparations with Ansible

pcfe@karhu pcfe.net (master) $ ansible-playbook -i ../inventories/ceph-ODROID-cluster.ini ceph-prepare-arm.yml -l overdrive-1000

The playbook used reads as follows:

This file was removed from my git repo because it's been replaced by another.

FIXME: update blog post

Partition with Ansible

Since I plan to use the OverDrive as an OSD host in my Ceph Luminous Cluster, I’ve set up LVM as follows:

pcfe@karhu pcfe.net (master) $ ansible-playbook -i ../inventories/ceph-ODROID-cluster.ini softiron-prep-disks.yml

The playbook used reads as follows:

# sets partitions on my SoftIron OverDrive 1000
# OS is installed in a VG_OD1000 of 60 GiB on the SSD
# HDD is unused
- hosts:
    - overdrive-1000
  become: yes

  # inspired by https://www.epilis.gr/en/blog/2017/08/09/extending-root-fs-whole-farm/
  tasks:
    - name: "PARTITIONS | get partition information of SSD (sda)"
      parted:
        device: /dev/sda
      register: sda_info

    # - debug: var=sda_info

    - block:
        - name: "PARTITIONS | if more than 100 MiB space left on the SSD, then create a new partition after {{sda_info.partitions[-1].end}}KiB"
          parted:
            part_start: "{{sda_info.partitions[-1].end}}KiB"
            device: /dev/sda
            number: "{{sda_info.partitions[-1].num + 1}}"
            flags: [ lvm ]
            label: gpt
            state: present

        - name: "PARTITIONS | partprobe after change to /dev/sda"
          command: partprobe

        - name: "LVM | create VG_Ceph_SSD_01 using PV /dev/sda{{ sda_info.partitions[-1].num + 1 }}"
          lvg:
            vg: VG_Ceph_SSD_01
            pvs: "/dev/sda{{ sda_info.partitions[-1].num + 1 }}"

        - name: "LVM | create LV_Ceph_SSD_OSD_01 in VG_Ceph_SSD_01"
          lvol:
            vg: VG_Ceph_SSD_01
            lv: LV_Ceph_SSD_OSD_01
            size: 100%FREE
      when: (sda_info.partitions[-1].end + 102400) < sda_info.disk.size

    - name: "PARTITIONS | get partition information of the HDD (sdb)"
      parted:
        device: /dev/sdb
      register: sdb_info

    # - debug: var=sdb_info.partitions

    - block:
        - name: "PARTITIONS | if no partitions on the HDD (sdb), then create one covering whole disk"
          parted:
            part_start: "0%"
            part_end: "100%"
            device: /dev/sdb
            number: 1
            flags: [ lvm ]
            label: gpt
            state: present

        - name: "PARTITIONS | partprobe after change to /dev/sdb"
          command: partprobe

        - name: "LVM | create VG_Ceph_HDD_01 using PV /dev/sdb1"
          lvg:
            vg: VG_Ceph_HDD_01
            pvs: "/dev/sdb1"

        - name: "LVM | create LV_Ceph_HDD_OSD_01 in VG_Ceph_HDD_01"
          lvol:
            vg: VG_Ceph_HDD_01
            lv: LV_Ceph_HDD_OSD_01
            size: 100%FREE
      when: not sdb_info.partitions|length

    - name: "PARTITIONS | get partition information of the HDD (sdb) again"
      parted:
        device: /dev/sdb
      register: sdb_info

    # - debug: var=sdb_info.partitions

    - block:
        - name: "PARTITIONS | if more than 1 GiB space left on sdb, then create a new partition after {{sdb_info.partitions[-1].end}}KiB"
          parted:
            part_start: "{{sdb_info.partitions[-1].end}}KiB"
            device: /dev/sdb
            number: "{{sdb_info.partitions[-1].num + 1}}"
            flags: [ lvm ]
            label: gpt
            state: present

        - name: "PARTITIONS | partprobe after change to /dev/sdb"
          command: partprobe

        - name: "LVM | create VG_Ceph_HDD_01 using PV /dev/sdb{{ sdb_info.partitions[-1].num + 1 }}"
          lvg:
            vg: VG_Ceph_HDD_01
            pvs: "/dev/sdb{{ sdb_info.partitions[-1].num + 1 }}"

        - name: "LVM | create LV_Ceph_HDD_OSD_01 in VG_Ceph_HDD_01"
          lvol:
            vg: VG_Ceph_HDD_01
            lv: LV_Ceph_HDD_OSD_01
            size: 100%FREE
      when:
        - sdb_info.partitions|length > 0
        - (sdb_info.partitions[-1].end + 1048576) < sdb_info.disk.size
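The two `when:` conditions that compare a partition end against the disk size are plain arithmetic in KiB, which is the default unit of the parted module. The sketch below restates that check in Python so the thresholds are easier to read: 102400 KiB is 100 MiB and 1048576 KiB is 1 GiB. The function name `has_room` and the sample sizes are illustrative assumptions, not values read from the machine.

```python
KIB_PER_MIB = 1024
KIB_PER_GIB = 1024 * 1024

# Thresholds used by the playbook, expressed in KiB.
MIN_FREE_SSD_KIB = 100 * KIB_PER_MIB  # 102400, the SSD (sda) check
MIN_FREE_HDD_KIB = 1 * KIB_PER_GIB    # 1048576, the HDD (sdb) check


def has_room(last_partition_end_kib, disk_size_kib, min_free_kib):
    """True when more than min_free_kib lies between the end of the
    last partition and the end of the disk (all values in KiB)."""
    return last_partition_end_kib + min_free_kib < disk_size_kib


# Illustrative numbers only: a disk of roughly 465.8 GiB whose last
# partition ends at about 61.25 GiB.
disk_kib = 488_386_584
last_end_kib = 64_225_280

print(has_room(last_end_kib, disk_kib, MIN_FREE_SSD_KIB))      # plenty of space left
print(has_room(disk_kib - 1024, disk_kib, MIN_FREE_SSD_KIB))   # only 1 MiB left
```

Because the check only looks at the last partition, it assumes partitions are laid out in order with the free space at the end of the disk.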

Test Logs

Check Rotational

Just to ensure the SSD is correctly differentiated from the HDD.

[root@overdrive-1000 ~]# lsblk
NAME                     MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda                        8:0    0 465,8G  0 disk
├─sda1                     8:1    0   200M  0 part /boot/efi
├─sda2                     8:2    0     1G  0 part /boot
└─sda3                     8:3    0    60G  0 part
  ├─VG_OD1000-LV_root    253:0    0     5G  0 lvm  /
  ├─VG_OD1000-LV_swap    253:1    0   512M  0 lvm  [SWAP]
  ├─VG_OD1000-home       253:2    0     5G  0 lvm  /home
  ├─VG_OD1000-var        253:3    0     2G  0 lvm  /var
  └─VG_OD1000-LV_var_log 253:4    0     1G  0 lvm  /var/log
sdb                        8:16   0 931,5G  0 disk

[root@overdrive-1000 ~]# cat /sys/block/sda/queue/rotational

0

[root@overdrive-1000 ~]# cat /sys/block/sdb/queue/rotational

1
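The same check can be scripted. This is a minimal sketch that reads the `queue/rotational` flag from sysfs, exactly as the two `cat` commands above do; the helper names `is_rotational` and `classify` are my own, and the `sysfs_root` parameter exists only so the functions can be pointed at a test directory.

```python
from pathlib import Path


def is_rotational(device, sysfs_root="/sys/block"):
    """Return True if the kernel flags the block device as rotational (HDD)."""
    flag = Path(sysfs_root, device, "queue", "rotational").read_text().strip()
    return flag == "1"


def classify(devices, sysfs_root="/sys/block"):
    """Map each device name to 'HDD' or 'SSD' based on the rotational flag."""
    return {
        dev: "HDD" if is_rotational(dev, sysfs_root) else "SSD"
        for dev in devices
    }


# Usage on the OverDrive itself would be:
#   print(classify(["sda", "sdb"]))
# which, given the output above, should label sda as SSD and sdb as HDD.
```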

fio, write, HDD

[root@overdrive-1000 ~]# fio --rw=write --name=write-HDD --filename=/dev/VG_Ceph_HDD_01/LV_Ceph_HDD_OSD_01

write-HDD: (g=0): rw=write, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

fio-3.7

Starting 1 process

Jobs: 1 (f=1): [f(1)][100.0%][r=0KiB/s,w=0KiB/s][r=0,w=0 IOPS][eta 00m:00s]

write-HDD: (groupid=0, jobs=1): err= 0: pid=4645: Mon Mar 25 14:41:58 2019

write: IOPS=41.5k, BW=162MiB/s (170MB/s)(932GiB/5887905msec)

clat (usec): min=6, max=33813, avg=22.67, stdev=451.40

lat (usec): min=6, max=33813, avg=22.91, stdev=451.40

clat percentiles (usec):

| 1.00th=[ 7], 5.00th=[ 7], 10.00th=[ 7], 20.00th=[ 7],

| 30.00th=[ 7], 40.00th=[ 7], 50.00th=[ 7], 60.00th=[ 8],

| 70.00th=[ 8], 80.00th=[ 8], 90.00th=[ 10], 95.00th=[ 10],

| 99.00th=[ 15], 99.50th=[ 17], 99.90th=[11076], 99.95th=[12911],

| 99.99th=[15139]

bw ( KiB/s): min=86736, max=469168, per=99.97%, avg=165837.82, stdev=33870.04, samples=11775

iops : min=21684, max=117292, avg=41459.40, stdev=8467.50, samples=11775

lat (usec) : 10=96.57%, 20=3.22%, 50=0.08%, 100=0.01%, 250=0.01%

lat (usec) : 500=0.01%, 750=0.01%, 1000=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 10=0.01%, 20=0.11%, 50=0.01%

cpu : usr=8.58%, sys=27.87%, ctx=293923, majf=0, minf=117

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,244189184,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

WRITE: bw=162MiB/s (170MB/s), 162MiB/s-162MiB/s (170MB/s-170MB/s), io=932GiB (1000GB), run=5887905-5887905msec

fio, write, SSD

[root@overdrive-1000 ~]# fio --rw=write --name=write-SSD --filename=/dev/VG_Ceph_SSD_01/LV_Ceph_SSD_OSD_01

write-SSD: (g=0): rw=write, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

fio-3.7

Starting 1 process

Jobs: 1 (f=1): [f(1)][100.0%][r=0KiB/s,w=0KiB/s][r=0,w=0 IOPS][eta 00m:00s]

write-SSD: (groupid=0, jobs=1): err= 0: pid=1758: Mon Mar 25 12:58:06 2019

write: IOPS=76.8k, BW=300MiB/s (315MB/s)(405GiB/1380607msec)

clat (usec): min=6, max=78458, avg=11.71, stdev=229.77

lat (usec): min=6, max=78458, avg=11.94, stdev=229.77

clat percentiles (usec):

| 1.00th=[ 7], 5.00th=[ 7], 10.00th=[ 7], 20.00th=[ 7],

| 30.00th=[ 7], 40.00th=[ 7], 50.00th=[ 7], 60.00th=[ 7],

| 70.00th=[ 8], 80.00th=[ 8], 90.00th=[ 9], 95.00th=[ 10],

| 99.00th=[ 14], 99.50th=[ 16], 99.90th=[ 24], 99.95th=[ 46],

| 99.99th=[11469]

bw ( KiB/s): min=265920, max=486264, per=99.99%, avg=307217.88, stdev=33450.30, samples=2761

iops : min=66480, max=121566, avg=76804.45, stdev=8362.58, samples=2761

lat (usec) : 10=97.05%, 20=2.82%, 50=0.09%, 100=0.01%, 250=0.01%

lat (usec) : 500=0.01%, 750=0.01%, 1000=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 10=0.01%, 20=0.04%, 50=0.01%

lat (msec) : 100=0.01%

cpu : usr=15.14%, sys=50.50%, ctx=47218, majf=0, minf=36

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,106052608,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

WRITE: bw=300MiB/s (315MB/s), 300MiB/s-300MiB/s (315MB/s-315MB/s), io=405GiB (434GB), run=1380607-1380607msec
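As a sanity check on the two fio summaries, the headline figures can be recomputed from the issued write count, the 4096-byte block size and the runtime that fio printed. This is only a worked calculation using numbers copied from the output above; the function name `summarise` is made up.

```python
BLOCK_SIZE = 4096  # bytes, from bs=4096B in both runs

# "issued rwts" write counts and runtimes copied from the fio output.
RUNS = {
    "write-HDD": {"ios": 244_189_184, "runtime_ms": 5_887_905},
    "write-SSD": {"ios": 106_052_608, "runtime_ms": 1_380_607},
}


def summarise(ios, runtime_ms, block_size=BLOCK_SIZE):
    """Derive IOPS, bandwidth and total data written from raw fio counters."""
    seconds = runtime_ms / 1000
    total_bytes = ios * block_size
    return {
        "iops": ios / seconds,
        "mib_per_s": total_bytes / 2**20 / seconds,  # binary units
        "mb_per_s": total_bytes / 10**6 / seconds,   # decimal units
        "gib_written": total_bytes / 2**30,
    }


for name, run in RUNS.items():
    s = summarise(**run)
    print(f"{name}: {s['iops']:.0f} IOPS, {s['mib_per_s']:.0f} MiB/s "
          f"({s['mb_per_s']:.0f} MB/s), {s['gib_written']:.0f} GiB written")
```

The results should line up with fio's own rounding (about 162 MiB/s for the HDD and 300 MiB/s for the SSD). Note that neither run passed `--direct=1`, so the writes presumably went through the page cache, which would explain the single-digit microsecond completion latencies even on the spinning disk.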

Articles Found on the Web

Here are a few articles (German and English) on the Opteron A1100, aka Seattle, that I read. Maybe they are of interest to you too.