Installing cephadm on Fedora Server


I want a playground for Ceph’s cephadm, which was introduced with Octopus and is also present in Pacific.

So I cleanly took my QNAP TS-473A out of my existing Ceph Nautilus cluster again (because I have enough combined capacity on my F5-422 nodes to be able to remove the OSDs in the TS-473A) and installed Fedora Server 35 plus cephadm from upstream.

This is just a quick braindump on how to get that done; playing with Ceph Pacific is for a later post.

Install Fedora Server

I use a QNAP TS-473A for my tests, but the below applies to any machine or VM running Fedora. Installation and initial configuration are described in a separate post. While that specific post is about Fedora Server 35, expect the installation method to be just as painless with later versions when they come out.

Install cephadm

For now, I did this manually, as per https://docs.ceph.com/en/pacific/cephadm/install/#distribution-specific-installations.

[root@ts-473a-01 ~]# dnf --assumeyes install cephadm

Last metadata expiration check: 1:29:04 ago on 2022-01-30T19:55:29 CET.

Dependencies resolved.

=========================================================================================================

Package Architecture Version Repository Size

=========================================================================================================

Installing:

cephadm noarch 2:16.2.7-2.fc35 updates 78 k

Transaction Summary

=========================================================================================================

Install 1 Package

Total download size: 78 k

Installed size: 312 k

Downloading Packages:

cephadm-16.2.7-2.fc35.noarch.rpm 351 kB/s | 78 kB 00:00

---------------------------------------------------------------------------------------------------------

Total 75 kB/s | 78 kB 00:01

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Running scriptlet: cephadm-2:16.2.7-2.fc35.noarch 1/1

Installing : cephadm-2:16.2.7-2.fc35.noarch 1/1

Running scriptlet: cephadm-2:16.2.7-2.fc35.noarch 1/1

Verifying : cephadm-2:16.2.7-2.fc35.noarch 1/1

Installed:

cephadm-2:16.2.7-2.fc35.noarch

Complete!
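To confirm that the package landed and that the tools cephadm looks for are around, something like the following could be used. This is only a sketch: `require_cmd` is a helper name made up for this example, and the list of tools is an assumption based on what the bootstrap host check reports (podman, systemctl, lvcreate).

```shell
#!/bin/sh
# require_cmd is a hypothetical helper for this sketch, not part of cephadm.
require_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "ok: $1"
    else
        echo "missing: $1" >&2
        return 1
    fi
}

# Tool list is an assumption, taken from what the bootstrap host check looks for.
for tool in cephadm podman systemctl lvcreate; do
    require_cmd "$tool" || true
done
```

`rpm -q cephadm` additionally shows which package version got installed.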

Bootstrap Cluster

I specify --cluster-network 192.168.30.0/24 because I have a separate network card for the cluster network, 10 GbE and with an MTU of 9000.

I specify --single-host-defaults because I just want to play with cephadm and Ceph Pacific; my other nodes (4x TerraMaster F5-422) currently run Ceph Nautilus, specifically Red Hat Ceph Storage 4.

I specify --allow-fqdn-hostname because of section "3.8.1. Recommended cephadm bootstrap command options" in the RHCS5 Installation Guide and section "Fully qualified domain names vs bare host names" of the latest (not Pacific) Ceph Docs.

I’ll see if these choices are wise; this is a playground for now.
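Since the cluster network is meant to run with an MTU of 9000, it can be worth checking that jumbo frames really pass end to end before putting Ceph traffic on it. A rough sketch: the helper name `icmp_payload_for_mtu` and the `PEER` variable are assumptions for illustration (set `PEER` to any other host on that network); the 28 bytes are the IPv4 header (20) plus the ICMP header (8).

```shell
#!/bin/sh
# Largest ICMP echo payload that fits into one unfragmented IPv4 packet:
# MTU minus 20 bytes IPv4 header minus 8 bytes ICMP header.
icmp_payload_for_mtu() {
    echo $(( $1 - 28 ))
}

# PEER is a placeholder; set it to another host on the cluster network.
PEER="${PEER:-}"
payload=$(icmp_payload_for_mtu 9000)

if [ -n "$PEER" ]; then
    # -M do forbids fragmentation, so the ping fails if a hop has a smaller MTU.
    ping -M do -c 3 -s "$payload" "$PEER"
else
    echo "would run: ping -M do -c 3 -s $payload <peer>"
fi
```

Without `PEER` set, the snippet only prints the command it would run.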

[root@ts-473a-01 ~]# hostname

ts-473a-01.internal.pcfe.net

[root@ts-473a-01 ~]# hostname -s

ts-473a-01

[root@ts-473a-01 ~]# for i in internal storage ceph ; do host ts-473a-01.${i}.pcfe.net;done

ts-473a-01.internal.pcfe.net has address 192.168.50.185

ts-473a-01.storage.pcfe.net has address 192.168.40.185

ts-473a-01.ceph.pcfe.net has address 192.168.30.185
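Whether `--allow-fqdn-hostname` comes into play depends on what `hostname` returns. A small sketch of that decision; `is_fqdn` is a helper invented here that simply treats any name containing a dot as fully qualified, which is a simplification and not necessarily how cephadm itself decides.

```shell
#!/bin/sh
# is_fqdn is a hypothetical helper: a name with a dot counts as fully qualified.
is_fqdn() {
    case "$1" in
        *.*) return 0 ;;
        *)   return 1 ;;
    esac
}

# Fall back to uname -n where the hostname command is not installed.
name=$(hostname 2>/dev/null || uname -n)
if is_fqdn "$name"; then
    echo "$name looks like an FQDN; consider --allow-fqdn-hostname"
else
    echo "$name is a bare host name"
fi
```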

[ceph: root@ts-473a-01 /]# ip r s

default via 192.168.50.254 dev enp6s0 proto static metric 101

192.168.30.0/24 dev enp2s0f0np0 proto kernel scope link src 192.168.30.185 metric 100

192.168.40.0/24 dev enp5s0 proto kernel scope link src 192.168.40.185 metric 102

192.168.50.0/24 dev enp6s0 proto kernel scope link src 192.168.50.185 metric 101
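With three networks on the box it is easy to mix up addresses. A quick check that the monitor IP sits outside the cluster network, and that the node's cluster address sits inside it, can be sketched in plain shell. `ip_to_int` and `ip_in_cidr` are helper names made up for this example, and it handles IPv4 only.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
# The function body is a subshell so the IFS change does not leak out.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Return 0 if address $1 lies inside CIDR network $2 (e.g. 192.168.30.0/24).
ip_in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
    [ $(( $addr & $mask )) -eq $(( $net & $mask )) ]
}

# Addresses taken from the output above.
if ip_in_cidr 192.168.30.185 192.168.30.0/24; then
    echo "cluster address is inside the cluster network"
fi
if ! ip_in_cidr 192.168.40.185 192.168.30.0/24; then
    echo "mon IP is outside the cluster network"
fi
```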

[root@ts-473a-01 ~]# cephadm bootstrap \

--mon-ip 192.168.40.185 \

--cluster-network 192.168.30.0/24 \

--single-host-defaults \

--allow-fqdn-hostname


Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit chronyd.service is enabled and running

Repeating the final host check...

podman (/usr/bin/podman) version 3.4.4 is present

systemctl is present

lvcreate is present

Unit chronyd.service is enabled and running

Host looks OK

Cluster fsid: e8727796-820d-11ec-b81e-245ebe4b8fc0

Verifying IP 192.168.40.185 port 3300 ...

Verifying IP 192.168.40.185 port 6789 ...

Mon IP `192.168.40.185` is in CIDR network `192.168.40.0/24`

Adjusting default settings to suit single-host cluster...

Pulling container image quay.io/ceph/ceph:v16...

Ceph version: ceph version 16.2.7 (dd0603118f56ab514f133c8d2e3adfc983942503) pacific (stable)

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

firewalld ready

Enabling firewalld service ceph-mon in current zone...

Waiting for mon to start...

Waiting for mon...

mon is available

Assimilating anything we can from ceph.conf...

Generating new minimal ceph.conf...

Restarting the monitor...

Setting mon public_network to 192.168.40.0/24

Setting cluster_network to 192.168.30.0/24

Wrote config to /etc/ceph/ceph.conf

Wrote keyring to /etc/ceph/ceph.client.admin.keyring

Creating mgr...

Verifying port 9283 ...

firewalld ready

Enabling firewalld service ceph in current zone...

firewalld ready

Enabling firewalld port 9283/tcp in current zone...

Waiting for mgr to start...

Waiting for mgr...

mgr not available, waiting (1/15)...

mgr not available, waiting (2/15)...

mgr not available, waiting (3/15)...

mgr is available

Enabling cephadm module...

Waiting for the mgr to restart...

Waiting for mgr epoch 5...

mgr epoch 5 is available

Setting orchestrator backend to cephadm...

Generating ssh key...

Wrote public SSH key to /etc/ceph/ceph.pub

Adding key to root@localhost authorized_keys...

Adding host ts-473a-01.internal.pcfe.net...

Deploying mon service with default placement...

Deploying mgr service with default placement...

Deploying crash service with default placement...

Deploying prometheus service with default placement...

Deploying grafana service with default placement...

Deploying node-exporter service with default placement...

Deploying alertmanager service with default placement...

Enabling the dashboard module...

Waiting for the mgr to restart...

Waiting for mgr epoch 9...

mgr epoch 9 is available

Generating a dashboard self-signed certificate...

Creating initial admin user...

Fetching dashboard port number...

firewalld ready

Enabling firewalld port 8443/tcp in current zone...

Ceph Dashboard is now available at:

URL: https://ts-473a-01.internal.pcfe.net:8443/

User: admin

Password: [REDACTED]

Enabling client.admin keyring and conf on hosts with "admin" label

You can access the Ceph CLI with:

sudo /usr/sbin/cephadm shell --fsid e8727796-820d-11ec-b81e-245ebe4b8fc0 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/docs/pacific/mgr/telemetry/

Bootstrap complete.

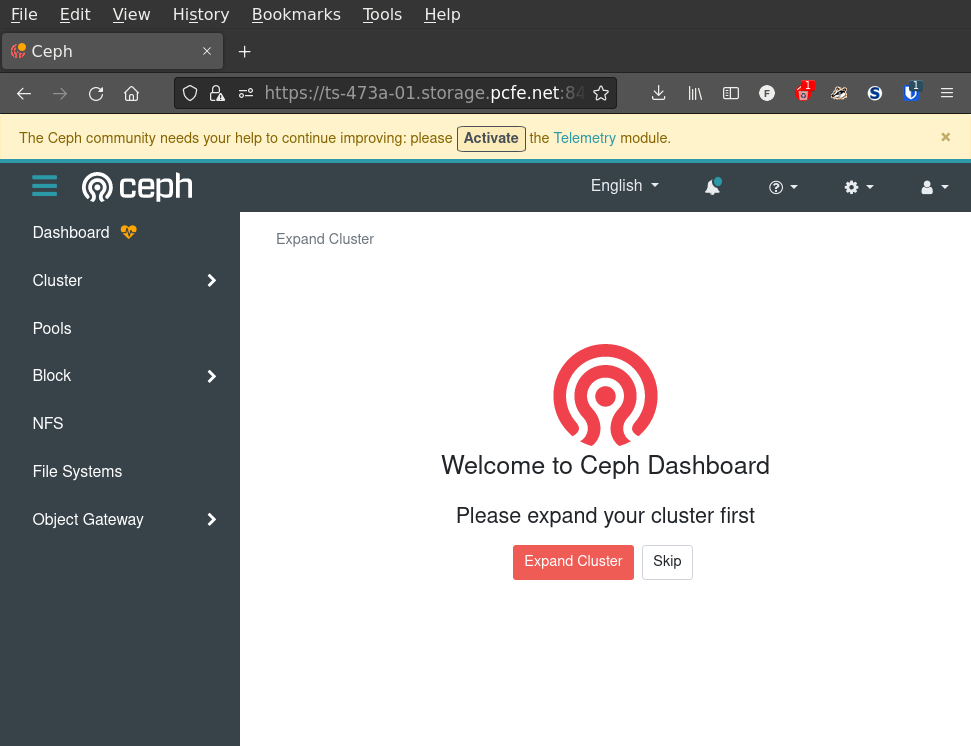

After bootstrap completed, I pointed Firefox (on my workstation) at the node, changed my password (as reasonably enforced by the web UI) and logged in.

Ceph Dashboard Screenshot

This was taken right after logging in.

Cephadm Shell

Also just a quick smoke test to see whether it is functional. As you can see, I have not yet set up OSDs, pools, etc.

[root@ts-473a-01 ~]# cat /etc/os-release

NAME="Fedora Linux"

VERSION="35 (Server Edition)"

ID=fedora

VERSION_ID=35

VERSION_CODENAME=""

PLATFORM_ID="platform:f35"

PRETTY_NAME="Fedora Linux 35 (Server Edition)"

ANSI_COLOR="0;38;2;60;110;180"

LOGO=fedora-logo-icon

CPE_NAME="cpe:/o:fedoraproject:fedora:35"

HOME_URL="https://fedoraproject.org/"

DOCUMENTATION_URL="https://docs.fedoraproject.org/en-US/fedora/f35/system-administrators-guide/"

SUPPORT_URL="https://ask.fedoraproject.org/"

BUG_REPORT_URL="https://bugzilla.redhat.com/"

REDHAT_BUGZILLA_PRODUCT="Fedora"

REDHAT_BUGZILLA_PRODUCT_VERSION=35

REDHAT_SUPPORT_PRODUCT="Fedora"

REDHAT_SUPPORT_PRODUCT_VERSION=35

PRIVACY_POLICY_URL="https://fedoraproject.org/wiki/Legal:PrivacyPolicy"

VARIANT="Server Edition"

VARIANT_ID=server

While I could just use cephadm shell, I used the full command provided by the bootstrap output.

[root@ts-473a-01 ~]# /usr/sbin/cephadm shell --fsid e8727796-820d-11ec-b81e-245ebe4b8fc0 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Using recent ceph image quay.io/ceph/ceph@sha256:bb6a71f7f481985f6d3b358e3b9ef64c6755b3db5aa53198e0aac38be5c8ae54

[ceph: root@ts-473a-01 /]# ceph -s

cluster:

id: e8727796-820d-11ec-b81e-245ebe4b8fc0

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 2

services:

mon: 1 daemons, quorum ts-473a-01.internal.pcfe.net (age 44m)

mgr: ts-473a-01.internal.pcfe.net.xsqvam(active, since 42m), standbys: ts-473a-01.mybqwb

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

[ceph: root@ts-473a-01 /]# exit
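For scripted smoke tests the interactive shell is not needed; `cephadm shell -- <command>` runs a single command in the container. Here is a sketch that maps the first word of `ceph health` to a short verdict. `classify_health` is a made-up helper, and the guard around `cephadm` is there so the snippet does nothing on machines without it.

```shell
#!/bin/sh
# classify_health is a hypothetical helper: it maps the status word printed
# by `ceph health` to a short verdict.
classify_health() {
    case "$1" in
        HEALTH_OK*)   echo ok ;;
        HEALTH_WARN*) echo warn ;;
        HEALTH_ERR*)  echo error ;;
        *)            echo unknown ;;
    esac
}

# Only call cephadm when it is actually installed.
if command -v cephadm >/dev/null 2>&1; then
    classify_health "$(cephadm shell -- ceph health 2>/dev/null)"
fi
```

On a cluster in the state shown above (HEALTH_WARN because there are no OSDs yet) this should print `warn`.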

That’s all for this post; it’s late, the weekend is nearly over and I am hungry.