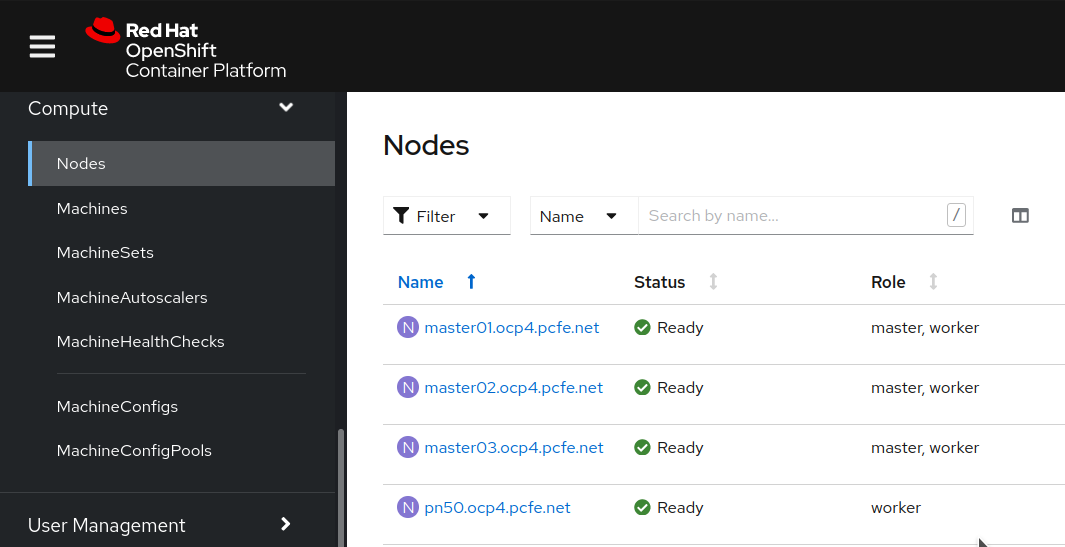

ASUS PN50 as worker node in OpenShift Container Platform

After upgrading the memory of my ASUS PN50 to 64 GiB, I installed it as an OpenShift Container Platform (OCP4) worker node.

This is NOT an OCP4 Install Guide

In our homelab, I do infrastructure and Janine does OCP4. As such, this blog post does not tell you how to install OCP4. It is a post about adding a PN50 as a worker node to an existing cluster, and it only documents what I did on the infrastructure side: hardware choices, UEFI settings, PXE target and a workaround for RHBZ #1839923: Very slow initial boot of rhcos installer kernel and initramfs …

Why

While we already had an OpenShift Container Platform 4.8 cluster made from 3 VMs (courtesy of Janine), we wanted to use the PN50 as an additional worker node, so that we can use OpenShift Virtualization.

Since my dreambox DM900 ultraHD is still our main PVR, it was OK to repurpose the PN50 from MythTV duties to OCP4 worker node duties.

This post is my braindump.

Additional Hardware

In addition to the 6 CPU core PN50 I already owned, I maxed out the RAM (non-ECC though) and added a second network interface.

If the node were doing serious work, we would have gone for the more expensive ECC RAM and the PN50 (or PN51) with the 8 core CPU.

64 GiB RAM

I went for a G.Skill Ripjaws 2 x 32 GB kit (F4-3200C22D-64GRS), because the price was OK, the kit has the right specifications plus it is listed in the PN50’s tested memory list.

[root@localhost-live ~]# lshw -class memory -sanitize

[...]

*-memory

description: System Memory

physical id: 31

slot: System board or motherboard

size: 64GiB

*-bank:0

description: SODIMM DDR4 Synchronous Unbuffered (Unregistered) 3200 MHz (0,3 ns)

product: F4-3200C22-32GRS

vendor: Unknown

physical id: 0

serial: [REMOVED]

slot: DIMM 0

size: 32GiB

width: 64 bits

clock: 3200MHz (0.3ns)

*-bank:1

description: SODIMM DDR4 Synchronous Unbuffered (Unregistered) 3200 MHz (0,3 ns)

product: F4-3200C22-32GRS

vendor: Unknown

physical id: 1

serial: [REMOVED]

slot: DIMM 0

size: 32GiB

width: 64 bits

clock: 3200MHz (0.3ns)

[...]

Second Network Interface

When I bought the PN50 last year, in my geographical location, only the single NIC model was available.

But I wanted a second network interface for storage traffic to my homelab Ceph cluster, so I purchased an Anker USB-C to Ethernet Adapter.

I chose this particular model because it is small, the vendor bothered to list Linux as a compatible OS, and I’ve been happy with Anker as a brand. That said, I expect any other USB to Ethernet dongle that works with kernel-4.18.0 would have been OK for this use case.

In lsusb, it shows as

Bus … Device …: ID 0bda:8153 Realtek Semiconductor Corp. RTL8153 Gigabit Ethernet Adapter

And in lshw it shows up as

[...]

*-usbhost:1

product: xHCI Host Controller

vendor: Linux 5.11.12-300.fc34.x86_64 xhci-hcd

physical id: 1

bus info: usb@4

logical name: usb4

version: 5.11

capabilities: usb-3.10

configuration: driver=hub slots=2 speed=10000Mbit/s

*-usb

description: Generic USB device

product: USB 10/100/1000 LAN

vendor: Realtek

physical id: 1

bus info: usb@4:1

version: 30.00

serial: [REMOVED]

capabilities: usb-3.00

configuration: driver=r8152 maxpower=288mA speed=5000Mbit/s

[...]

Firmware (aka BIOS) Update to 0623

(this was actually done while the PN50 was still running F34)

While I was hoping that on a mid-2020 released, UEFI enabled, machine I could comfortably apply vendor “BIOS updates” through fwupd, as of 2021-08-07, ASUS did not (yet) seem to have it in LVFS. Seriously ASUS, you can do better.

So I updated to the 0623 firmware by:

- downloading a zip from the vendor

- ensuring /dev/nvme0n1p2 is mounted at /boot/efi/

- unzipping to /boot/efi/EFI/ (I could also have extracted it to a USB stick)

- entering UEFI (aka BIOS) setup by pressing Del during power on self test (POST)

- choosing Tool / Start ASUS EzFlash

- selecting the extracted PN50-ASUS-….CAP

- letting the tool apply the upgrade

Firmware Settings

These are close, but not identical, to what I used when the PN50 was used for MythTV. The most notable difference is Secure Boot, which I had to disable for PXE installation (as rhcos-4.8.2-x86_64-live-kernel-x86_64 failed the signature test).

Once the worker node is installed, I can boot with Secure Boot enabled just fine. Since we do not use any of WiFi, Bluetooth or IR when the PN50 serves as an OCP4 worker node, those were disabled.

I changed the following from the shipped defaults (note that some options, e.g. detailed Trusted Computing options, only show up after save & reset of the parent option):

- Advanced / Trusted Computing: Enabled

- Advanced / Network Stack Configuration / Network Stack: Enabled

- Advanced / Network Stack Configuration / Ipv4 PXE Support: Enabled

- Advanced / Network Stack Configuration / Ipv6 PXE Support: Enabled

- Advanced / Onboard Devices Configuration / LAN: Enabled

- Advanced / Onboard Devices Configuration: all others (WLAN, BT, CIR, HDMI CEC) Disabled

- Advanced / APM / Restore AC Power Loss: Last State

- Advanced / APM / Power On By PCI-E: Enabled

- Advanced / Platform Configuration / PSS Support: Enabled

- Advanced / Platform Configuration / SVM Mode: Enabled

- Boot / Boot Configuration / Boot Logo Display: Full Screen

- Boot / Boot Configuration / Wait for ‘F1’ If Error: Disabled

- Boot / Boot Configuration / Fast Boot: Disabled

- Boot / Secure Boot: Enabled (but only after rhcos was installed)

Some of these are for my comfort (e.g. PXE), others are because I have no plans to run Windows on this hardware and thus no need for Windows specific dumb downs of the options (e.g. boot logo size).

Notes

- If your PXE setup does not serve signed files, leave Secure Boot disabled until you have finished installing. It’s OK to enable it after installation; Red Hat CoreOS has all the needed bits signed.

- MCTP and DASH are off in the default settings. While I did enable them after upgrading to version 0416 and on later versions, these two settings do not seem to stick (others, e.g. enabling PXE boot or setting last state for AC state after power loss, do stick). Every time I revisit the (currently 0623) firmware settings, they are Disabled again.

PXE Installation

I presume installation from ISO would work too but I saw no reason to bother plugging USB media in when I have a known working PXE setup that serves me well.

For PXE installation, I grabbed the needed files from https://mirror.openshift.com/, putting the kernel and initrd on the PXE server and live rootfs on the fileserver.

Download commands used:

[pcfe@fileserver ~]$ curl -O https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/latest/4.8.2/rhcos-4.8.2-x86_64-live-kernel-x86_64

[pcfe@fileserver ~]$ curl -O https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/latest/4.8.2/rhcos-4.8.2-x86_64-live-initramfs.x86_64.img

[pcfe@fileserver ~]$ curl -O https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/latest/4.8.2/rhcos-4.8.2-x86_64-live-rootfs.x86_64.img

[pcfe@fileserver ~]$ curl -O https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/latest/4.8.2/sha256sum.txt

[pcfe@fileserver ~]$ sha256sum -c sha256sum.txt

[pcfe@fileserver ~]$ scp rhcos-4.8.2-x86_64-live-initramfs.x86_64.img pxeserver:/var/lib/tftpboot/images/rhcos-4.8.2/

[pcfe@fileserver ~]$ scp rhcos-4.8.2-x86_64-live-kernel-x86_64 pxeserver:/var/lib/tftpboot/images/rhcos-4.8.2/
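The checksum step above can also be scripted. The following is a minimal sketch, assuming sha256sum.txt uses the usual `<hex digest>  <filename>` layout; the helper names `sha256_of` and `verify_listed_files` are made up for illustration. It only checks files that are actually present, which is roughly what GNU `sha256sum --ignore-missing -c` does. The demo at the bottom uses throwaway files, not the real rhcos images.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path, chunk_size=1024 * 1024):
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_listed_files(checksum_file, directory="."):
    """Map each listed file that exists in `directory` to True/False (digest matches)."""
    results = {}
    for line in Path(checksum_file).read_text().splitlines():
        if not line.strip():
            continue
        # Assumed layout: "<hex digest>  <filename>" (a leading '*' marks binary mode)
        expected, name = line.split(maxsplit=1)
        name = name.strip().lstrip("*")
        candidate = Path(directory) / name
        if candidate.exists():
            results[name] = sha256_of(candidate) == expected.lower()
    return results


# Demo with throwaway files (hypothetical names, not the rhcos artifacts)
with tempfile.TemporaryDirectory() as workdir:
    (Path(workdir) / "demo.img").write_bytes(b"hello")
    good_digest = hashlib.sha256(b"hello").hexdigest()
    listing = Path(workdir) / "sha256sum.txt"
    listing.write_text(
        good_digest + "  demo.img\n" + "0" * 64 + "  not-downloaded.img\n"
    )
    demo_result = verify_listed_files(listing, workdir)

print(demo_result)
```

Skipping entries that were not downloaded keeps the result readable when the checksum list covers more artifacts than the three files fetched here.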

Now I had to instruct the Red Hat CoreOS (rhcos) installer to

- install to the NVMe with install_dev=nvme0n1

- use the correct DNS with nameserver=192.168.50.248

- configure the on-board network interface (enp2s0f0) with IP 192.168.70.8, netmask 255.255.255.0, gateway 192.168.70.1 and a hostname

- configure the USB network interface (enp5s0f3u1) with IP 192.168.40.11, netmask 255.255.255.0, no gateway, no hostname

- use the on-board NIC (enp2s0f0) for network booting with bootdev=enp2s0f0

On my PXE server, I used the following entry in /var/lib/tftpboot/uefi/grub.cfg:

menuentry 'Install PN50 as OCP4 worker node' --class fedora --class gnu-linux --class gnu --class os {

linuxefi images/rhcos-4.8.2/rhcos-4.8.2-x86_64-live-kernel-x86_64 coreos.inst=yes coreos.inst.install_dev=nvme0n1 coreos.inst.ignition_url=http://fileserver.internal.pcfe.net/~jeichler/cluster-ocp4/worker.ign coreos.live.rootfs_url=http://fileserver.internal.pcfe.net/ftp/redhat/CoreOS/4.8.2/rhcos-4.8.2-x86_64-live-rootfs.x86_64.img rd.neednet=1 nameserver=192.168.50.248 ip=192.168.70.8::192.168.70.1:255.255.255.0:pn50.ocp4.pcfe.net:enp2s0f0:none ip=192.168.40.11:::255.255.255.0::enp5s0f3u1:none bootdev=enp2s0f0

initrdefi images/rhcos-4.8.2/rhcos-4.8.2-x86_64-live-initramfs.x86_64.img

}
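The two ip= arguments in the entry above follow dracut's static layout, `ip=<client-IP>:[<peer>]:<gateway-IP>:<netmask>:<hostname>:<interface>:<autoconf>`, where unused fields are simply left empty. As a small sketch of how the colons line up (the helper name `dracut_ip_arg` is hypothetical, not part of any tool), the strings can be composed like this:

```python
def dracut_ip_arg(address, netmask, interface,
                  gateway="", hostname="", peer="", autoconf="none"):
    """Compose a static dracut ip= argument; empty fields stay empty between colons."""
    fields = [address, peer, gateway, netmask, hostname, interface, autoconf]
    return "ip=" + ":".join(fields)


# On-board NIC: gateway and hostname set
onboard = dracut_ip_arg(
    "192.168.70.8", "255.255.255.0", "enp2s0f0",
    gateway="192.168.70.1", hostname="pn50.ocp4.pcfe.net",
)

# USB NIC for storage traffic: no gateway, no hostname
usb = dracut_ip_arg("192.168.40.11", "255.255.255.0", "enp5s0f3u1")

print(onboard)
print(usb)
```

Both results match the arguments used in the grub entry, which makes it easier to see why the USB interface line has three colons after the address and two before the interface name.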

Workaround for Slow Boot

NOTE: This workaround is no longer needed on OCP 4.12.

With rhcos 4.8.2 on the PN50, I ran into RHBZ #1839923: Very slow initial boot of rhcos installer kernel and initramfs … aka issue #567, remove console=ttyS0 on metal.

To work around that:

- do a PXE install as described above

- after rhcos finishes the initial touching of the disk and tries to boot from internal storage, interrupt grub before the timeout and remove the console=ttyS0,115200n8 statement from the kernel line

- once the PN50 has finished booting and is reachable on the network, ssh in with ssh core@pn50.ocp4.pcfe.net and then use sudo rpm-ostree kargs --delete 'console=ttyS0,115200n8' (I found that here)
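To illustrate what both steps do to the kernel command line, here is a tiny sketch. It is not how grub or rpm-ostree work internally, the helper `drop_karg` is hypothetical, and the sample command line is made up for illustration rather than copied from the PN50.

```python
def drop_karg(cmdline, karg):
    """Return the kernel command line without exact matches of `karg`."""
    return " ".join(arg for arg in cmdline.split() if arg != karg)


# Illustrative command line only (assumption, not taken from the node)
sample = "console=tty0 console=ttyS0,115200n8 ignition.platform.id=metal"
cleaned = drop_karg(sample, "console=ttyS0,115200n8")
print(cleaned)
```

Only the serial console argument is removed; any other console= argument stays in place.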

Cost

As I expect people to wonder how much this sums up to;

| Cost (€) | Item |
|---|---|
| 472 | 1 x ASUS PN50-BR037MD, Mini-PC with 6 core AMD Ryzen 5 4500U (in September 2020) |
| ~ 40 | 1 x nvme0n1 238.5G (I had a spare on the shelf) |
| 250 | 2 x 32 GiB SO-DIMM |
| 17 | 1 x USB-C to Ethernet Adapter |

So just shy of 780€.
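For anyone wanting to redo the sum with their own prices, a trivial sketch using the figures from the table (the NVMe price is the approximate one given there):

```python
# Prices in EUR, taken from the table above; the NVMe figure is approximate
costs_eur = {
    "ASUS PN50-BR037MD": 472,
    "NVMe SSD (spare, approx.)": 40,
    "2 x 32 GiB SO-DIMM": 250,
    "USB-C to Ethernet adapter": 17,
}

total_eur = sum(costs_eur.values())
print(total_eur)  # 779
```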

Ceph RBD Storage Performance Smoke Test

From an earlier blog post I know that my Ceph cluster can outperform a 1 Gigabit network connection on some tests (the linked tests were run on a hypervisor that has 2x 10 Gigabit network), but certainly not by such a wide margin that there would be value in attaching a > 1 Gigabit network interface to the PN50 for storage access.

I just wanted to see if on read only and write only tests I could get half decent numbers without any tuning of the VM. The Ceph cluster and the PN50 being consumer hardware, I do not expect great numbers, just checking if testing CNV gives me OKish disk performance for some test installs.
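As a point of reference for the numbers that follow, this is a rough sketch of the ceiling a 1 Gigabit link puts on storage throughput. It assumes the raw line rate of 1,000,000,000 bit/s and ignores Ethernet, TCP/IP and Ceph protocol overhead, so the usable figure is lower.

```python
LINK_BITS_PER_SECOND = 1_000_000_000  # nominal 1 Gigabit Ethernet line rate


def link_ceiling_mib_per_s(bits_per_second):
    """Convert a raw line rate in bit/s to MiB/s (no protocol overhead considered)."""
    return bits_per_second / 8 / 2**20


ceiling = link_ceiling_mib_per_s(LINK_BITS_PER_SECOND)
print(f"{ceiling:.1f} MiB/s")  # 119.2 MiB/s
```

fio reports bandwidth in MiB/s, so this is the unit to compare against.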

Prepare for fio

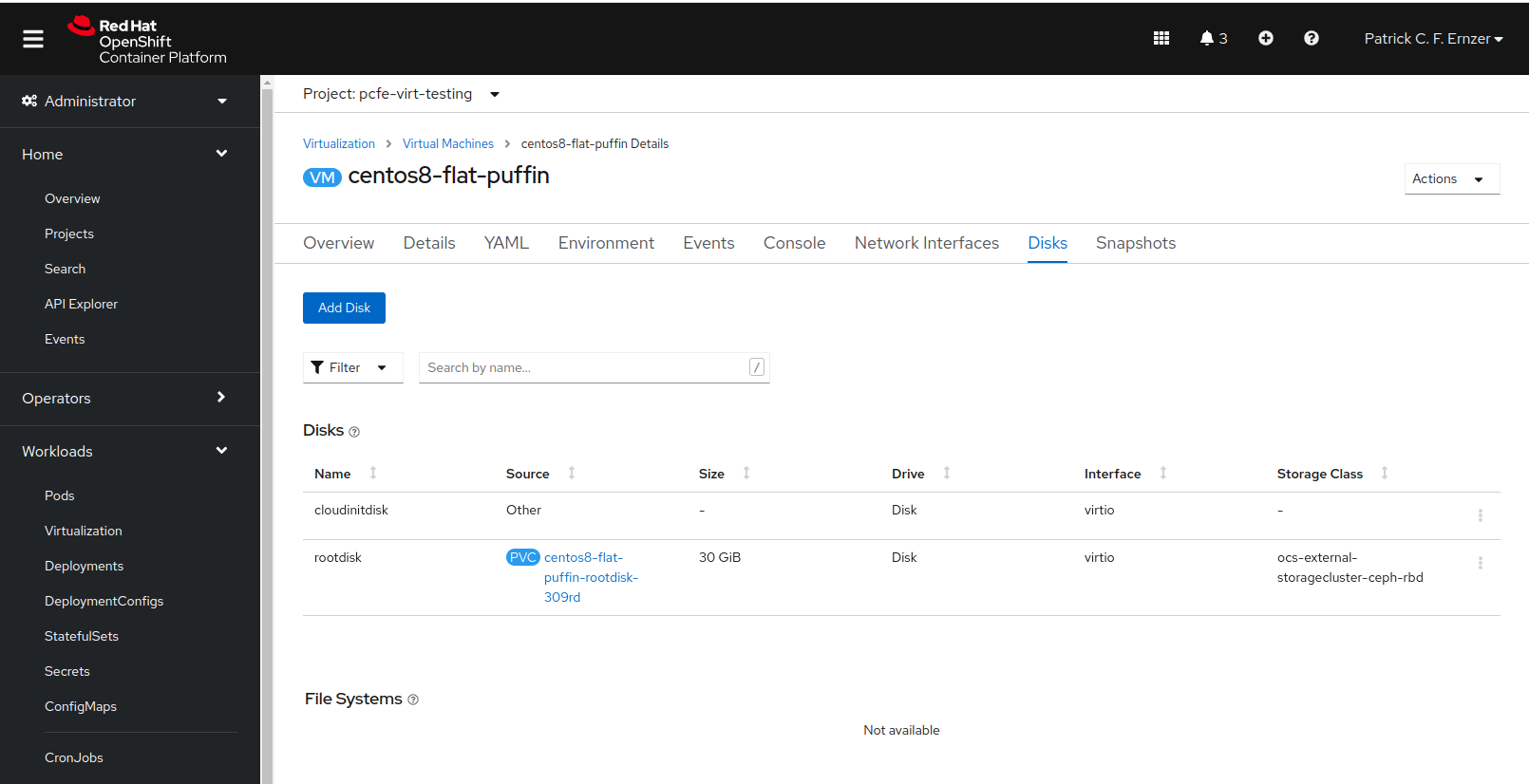

I instantiated a VM of type CentOS 8.0+, flavour Small: 1 CPU | 2 GiB memory and workload profile server, because I am doing a smoke test, not in-depth performance measurements. The VM was given a 30 GiB disk on Ceph RBD.

Since the VM has 2 GiB RAM and a bit more than 28 GiB free space, a test size of 12 GiB seemed reasonable for a quick fio smoke test.

fio preparation details:

pcfe@workstation ~ $ ssh centos@pn50.ocp4.pcfe.net -p 32662

[centos@centos8-flat-puffin ~]$ sudo -i

[root@centos8-flat-puffin ~]# dnf install tmux fio bash-completion

[root@centos8-flat-puffin ~]# mkdir /tmp/fiotest

[root@centos8-flat-puffin ~]# df -h /tmp/fiotest/

Filesystem Size Used Avail Use% Mounted on

/dev/vda1 30G 1,7G 29G 6% /

[root@centos8-flat-puffin ~]# free -h

total used free shared buff/cache available

Mem: 1,8Gi 153Mi 1,2Gi 8,0Mi 452Mi 1,5Gi

Swap: 0B 0B 0B

Sequential Read, 4k Blocksize

READ: bw=95.7MiB/s, iops: avg=24538.82

Full fio output:

[root@centos8-flat-puffin ~]# fio --name=banana --rw=read --size=12g --directory=/tmp/fiotest/ --bs=4k

banana: (g=0): rw=read, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

fio-3.19

Starting 1 process

banana: Laying out IO file (1 file / 12288MiB)

Jobs: 1 (f=1): [R(1)][100.0%][r=112MiB/s][r=28.6k IOPS][eta 00m:00s]

banana: (groupid=0, jobs=1): err= 0: pid=13646: Tue Aug 24 13:58:05 2021

read: IOPS=24.5k, BW=95.7MiB/s (100MB/s)(12.0GiB/128339msec)

clat (nsec): min=591, max=330175k, avg=40227.04, stdev=1859857.09

lat (nsec): min=651, max=330175k, avg=40312.57, stdev=1859856.88

clat percentiles (nsec):

| 1.00th=[ 732], 5.00th=[ 740], 10.00th=[ 756],

| 20.00th=[ 772], 30.00th=[ 780], 40.00th=[ 804],

| 50.00th=[ 812], 60.00th=[ 852], 70.00th=[ 1128],

| 80.00th=[ 1176], 90.00th=[ 1208], 95.00th=[ 1320],

| 99.00th=[ 1768], 99.50th=[ 5984], 99.90th=[ 69120],

| 99.95th=[ 24248320], 99.99th=[105381888]

bw ( KiB/s): min=39813, max=131072, per=100.00%, avg=98156.33, stdev=15883.26, samples=255

iops : min= 9953, max=32768, avg=24538.82, stdev=3970.74, samples=255

lat (nsec) : 750=5.04%, 1000=63.07%

lat (usec) : 2=31.21%, 4=0.12%, 10=0.18%, 20=0.14%, 50=0.12%

lat (usec) : 100=0.01%, 250=0.01%, 500=0.01%, 750=0.01%, 1000=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 10=0.01%, 20=0.01%, 50=0.02%

lat (msec) : 100=0.02%, 250=0.01%, 500=0.01%

cpu : usr=1.87%, sys=3.33%, ctx=2716, majf=0, minf=15

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=3145728,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=95.7MiB/s (100MB/s), 95.7MiB/s-95.7MiB/s (100MB/s-100MB/s), io=12.0GiB (12.9GB), run=128339-128339msec

Disk stats (read/write):

vda: ios=12485/7, merge=17/0, ticks=1640535/282, in_queue=1640817, util=97.87%
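The figures in the output above can be cross-checked against each other. This is a small sketch using only numbers copied from that output; the last calculation assumes that all 12 GiB were actually read from vda, which the output does not prove.

```python
BLOCK_SIZE = 4096          # --bs=4k
MIB = 2**20

issued_reads = 3_145_728   # "issued rwts: total=3145728,..."
runtime_ms = 128_339       # "run=128339-128339msec"
avg_iops = 24_538.82       # "iops : ... avg=24538.82"
vda_read_ios = 12_485      # "vda: ios=12485/7"

# Total data read: issued 4 KiB reads times block size
total_mib = issued_reads * BLOCK_SIZE / MIB

# Bandwidth as fio reports it: total data divided by wall-clock runtime
bw_from_runtime = total_mib / (runtime_ms / 1000)

# Bandwidth implied by the averaged per-sample IOPS figure
bw_from_iops = avg_iops * BLOCK_SIZE / MIB

# Average size of a read request as seen by the block device (assumption: see lead-in)
avg_request_kib = total_mib * 1024 / vda_read_ios

print(f"total read:        {total_mib:.0f} MiB")
print(f"bw from runtime:   {bw_from_runtime:.1f} MiB/s")
print(f"bw from avg IOPS:  {bw_from_iops:.2f} MiB/s")
print(f"avg vda request:   {avg_request_kib:.0f} KiB")
```

The runtime-based figure reproduces the reported 95.7 MiB/s. The average request reaching vda comes out at roughly 1 MiB, which would be consistent with the kernel coalescing the buffered 4k sequential reads into much larger requests, so the 4k IOPS number here says little about small-request performance of the RBD volume itself.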

Sequential Write, 4k Blocksize

WRITE: bw=74.4MiB/s, iops: avg=19203.96

Full fio output:

[root@centos8-flat-puffin ~]# fio --name=banana --rw=write --size=12g --directory=/tmp/fiotest/ --bs=4k

banana: (g=0): rw=write, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

fio-3.19

Starting 1 process

Jobs: 1 (f=1): [W(1)][100.0%][w=23.1MiB/s][w=5919 IOPS][eta 00m:00s]

banana: (groupid=0, jobs=1): err= 0: pid=13729: Tue Aug 24 14:17:06 2021

write: IOPS=19.1k, BW=74.4MiB/s (78.1MB/s)(12.0GiB/165068msec); 0 zone resets

clat (nsec): min=1533, max=832092k, avg=51587.45, stdev=1233941.59

lat (nsec): min=1613, max=832093k, avg=51706.46, stdev=1233941.93

clat percentiles (nsec):

| 1.00th=[ 1592], 5.00th=[ 1624], 10.00th=[ 1640],

| 20.00th=[ 1688], 30.00th=[ 1736], 40.00th=[ 1896],

| 50.00th=[ 2640], 60.00th=[ 2736], 70.00th=[ 2800],

| 80.00th=[ 2960], 90.00th=[ 3472], 95.00th=[ 4960],

| 99.00th=[ 23424], 99.50th=[ 51456], 99.90th=[12779520],

| 99.95th=[16908288], 99.99th=[23199744]

bw ( KiB/s): min= 56, max=182240, per=100.00%, avg=76816.71, stdev=43186.68, samples=326

iops : min= 14, max=45560, avg=19203.96, stdev=10796.69, samples=326

lat (usec) : 2=42.55%, 4=50.13%, 10=5.80%, 20=0.45%, 50=0.55%

lat (usec) : 100=0.08%, 250=0.01%, 500=0.01%, 750=0.01%, 1000=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 10=0.26%, 20=0.14%, 50=0.01%

lat (msec) : 100=0.01%, 250=0.01%, 500=0.01%, 750=0.01%, 1000=0.01%

cpu : usr=1.99%, sys=5.14%, ctx=13332, majf=0, minf=15

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,3145728,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

WRITE: bw=74.4MiB/s (78.1MB/s), 74.4MiB/s-74.4MiB/s (78.1MB/s-78.1MB/s), io=12.0GiB (12.9GB), run=165068-165068msec

Disk stats (read/write):

vda: ios=3/12471, merge=0/28, ticks=203/10250337, in_queue=10250540, util=81.88%

Sequential, 80% Read, 20% Write, 4k Blocksize

READ: bw=75.5MiB/s, iops: avg=19367.05

WRITE: bw=18.9MiB/s, iops: avg=4842.16

Full fio output:

[root@centos8-flat-puffin ~]# fio --name=banana --rw=readwrite --rwmixread=80 --size=12g --directory=/tmp/fiotest/ --bs=4k

banana: (g=0): rw=rw, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

fio-3.19

Starting 1 process

Jobs: 1 (f=1): [M(1)][99.2%][r=104MiB/s,w=25.6MiB/s][r=26.6k,w=6549 IOPS][eta 00m:01s]

banana: (groupid=0, jobs=1): err= 0: pid=13733: Tue Aug 24 14:21:29 2021

read: IOPS=19.3k, BW=75.5MiB/s (79.2MB/s)(9830MiB/130156msec)

clat (nsec): min=601, max=542291k, avg=50272.00, stdev=2442581.61

lat (nsec): min=671, max=542291k, avg=50351.67, stdev=2442581.78

clat percentiles (nsec):

| 1.00th=[ 740], 5.00th=[ 764], 10.00th=[ 764],

| 20.00th=[ 780], 30.00th=[ 788], 40.00th=[ 812],

| 50.00th=[ 820], 60.00th=[ 860], 70.00th=[ 924],

| 80.00th=[ 1176], 90.00th=[ 1256], 95.00th=[ 1384],

| 99.00th=[ 1896], 99.50th=[ 6240], 99.90th=[ 54528],

| 99.95th=[ 29491200], 99.99th=[130547712]

bw ( KiB/s): min= 4096, max=118784, per=100.00%, avg=77468.95, stdev=22451.84, samples=259

iops : min= 1024, max=29696, avg=19367.05, stdev=5612.91, samples=259

write: IOPS=4834, BW=18.9MiB/s (19.8MB/s)(2458MiB/130156msec); 0 zone resets

clat (nsec): min=1171, max=3271.2k, avg=2410.37, stdev=7125.23

lat (nsec): min=1251, max=3271.3k, avg=2513.17, stdev=7239.56

clat percentiles (nsec):

| 1.00th=[ 1320], 5.00th=[ 1608], 10.00th=[ 1640], 20.00th=[ 1688],

| 30.00th=[ 1720], 40.00th=[ 1752], 50.00th=[ 1816], 60.00th=[ 1960],

| 70.00th=[ 2352], 80.00th=[ 2800], 90.00th=[ 3152], 95.00th=[ 3760],

| 99.00th=[ 9792], 99.50th=[20608], 99.90th=[34560], 99.95th=[43264],

| 99.99th=[76288]

bw ( KiB/s): min= 1056, max=30272, per=100.00%, avg=19369.53, stdev=5659.15, samples=259

iops : min= 264, max= 7568, avg=4842.16, stdev=1414.77, samples=259

lat (nsec) : 750=0.99%, 1000=59.59%

lat (usec) : 2=31.09%, 4=7.03%, 10=0.84%, 20=0.20%, 50=0.18%

lat (usec) : 100=0.01%, 250=0.01%, 500=0.01%, 750=0.01%, 1000=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 10=0.01%, 20=0.01%, 50=0.02%

lat (msec) : 100=0.02%, 250=0.01%, 500=0.01%, 750=0.01%

cpu : usr=1.91%, sys=3.86%, ctx=2155, majf=0, minf=21

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=2516533,629195,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=75.5MiB/s (79.2MB/s), 75.5MiB/s-75.5MiB/s (79.2MB/s-79.2MB/s), io=9830MiB (10.3GB), run=130156-130156msec

WRITE: bw=18.9MiB/s (19.8MB/s), 18.9MiB/s-18.9MiB/s (19.8MB/s-19.8MB/s), io=2458MiB (2577MB), run=130156-130156msec

Disk stats (read/write):

vda: ios=10023/2478, merge=17/14, ticks=1559575/1130581, in_queue=2690157, util=93.08%
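To check that the mixed run really delivered the requested 80/20 split, and to get one combined throughput number, here is a short sketch using only values copied from the output above:

```python
reads_issued = 2_516_533    # "issued rwts: total=2516533,629195,..."
writes_issued = 629_195
read_mib = 9830             # "io=9830MiB"
write_mib = 2458            # "io=2458MiB"
runtime_s = 130.156         # "run=130156-130156msec"

# Share of read operations among all issued operations
read_share = reads_issued / (reads_issued + writes_issued)

# Combined read plus write throughput over the whole run
combined_mib_per_s = (read_mib + write_mib) / runtime_s

print(f"read share: {read_share:.1%}")
print(f"combined:   {combined_mib_per_s:.1f} MiB/s")
```

The issued operations add up to the same 3145728 blocks as in the pure read and pure write runs, and the combined rate lands close to the pure sequential read result.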

fio Smoke Test Summary

Still OKish for quick test VMs considering how lightweight my Ceph cluster is and that I only have a 1 Gigabit uplink from the PN50 to it.

But for any serious workload this does not cut the mustard, especially on mixed disk access.

On e.g. dnf upgrade, the VM spends way too much time in iowait for my taste.